FSI & Banking platform with Databricks Data Intelligence Platform - Fraud detection in real time

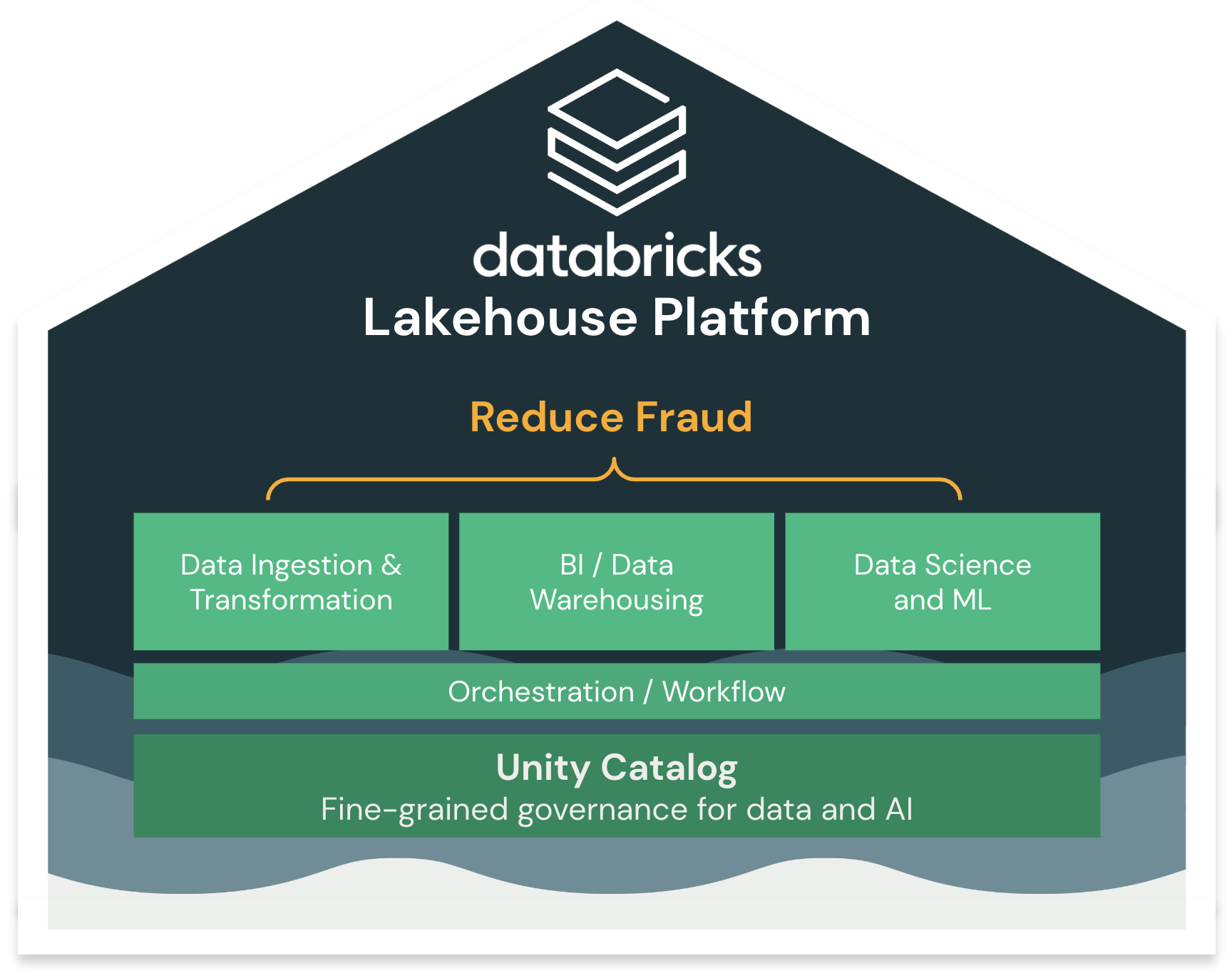

What is the Databricks Data Intelligence Platform for Banking?

It's the only enterprise data platform that lets you leverage all your data, from any source and on any workload, to optimize your business with real-time data at the lowest cost.

The Lakehouse allows you to centralize all your data, from customer & retail banking data to real-time fraud detection, providing operational speed and efficiency at a scale never before possible.

Simple

One single platform and governance/security layer for your data warehousing and AI to **accelerate innovation** and **reduce risks**. No need to stitch together multiple solutions with disparate governance and high complexity.

Open

Built on open source and open standards. You own your data and prevent vendor lock-in, with easy integration with external solutions. Being open also lets you share your data with any external organization, regardless of their data stack/vendor.

Multicloud

One consistent data platform across clouds. Process your data where you need.

Reducing Fraud with the lakehouse

Being able to collect and centralize information in real time is critical for the industry. Data is the key to unlocking critical capabilities such as real-time personalization or fraud prevention.

What we'll build

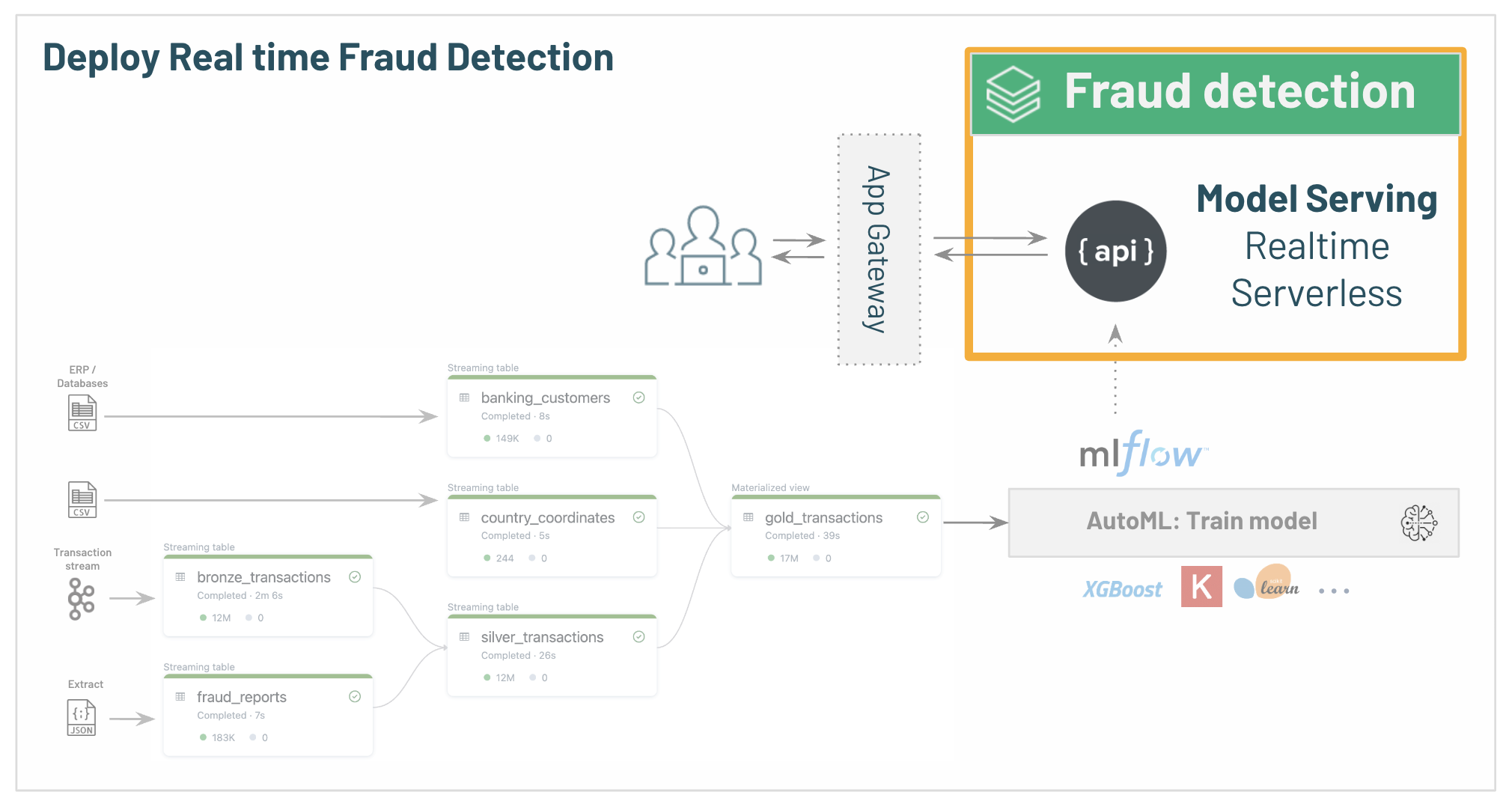

In this demo, we'll build an end-to-end Banking platform, collecting data from multiple sources in real time.

With this information, we'll not only be able to analyse past fraud and understand common patterns, but we'll also be able to rate the risk of each financial transaction in real time.

Based on this information, we'll be able to proactively reduce fraud. A typical example is asking for an extra security challenge, or requesting human intervention, when our model returns a high risk score for a transaction.
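To make the idea concrete, here is a minimal sketch of how a risk score could be mapped to a follow-up action. The thresholds, the `Action` names and the `decide_action` helper are hypothetical and chosen only for illustration; the demo itself does not prescribe them.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    CHALLENGE = "extra_security_challenge"
    MANUAL_REVIEW = "human_review"


# Hypothetical thresholds: real values would be tuned on historical fraud data.
CHALLENGE_THRESHOLD = 0.5
REVIEW_THRESHOLD = 0.9


def decide_action(fraud_score: float) -> Action:
    """Map a model score in [0, 1] to a follow-up action."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {fraud_score}")
    if fraud_score >= REVIEW_THRESHOLD:
        return Action.MANUAL_REVIEW
    if fraud_score >= CHALLENGE_THRESHOLD:
        return Action.CHALLENGE
    return Action.APPROVE
```

With these assumed thresholds, a low score lets the transaction through, a medium score triggers the extra security challenge, and only the highest scores are routed to a human reviewer.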

At a very high level, this is the flow we'll implement:

1. Ingest and create our Banking database, with tables that are easy to query in SQL.

2. Secure data and grant read access to the Data Analyst and Data Science teams.

3. Run BI queries to analyse existing fraud.

4. Build ML models & deploy them to provide real-time fraud detection capabilities.

Our dataset

To simplify this demo, we'll consider that an external system is periodically sending data into our blob storage (S3/ADLS/GCS):

- Banking transaction history

- Customer data (profile)

- Metadata table

- Report of past fraud (used as our label)

*Note that at a technical level, our data could come from any source. Databricks can ingest data from any system (Salesforce, Fivetran, message queues like Kafka, blob storage, SQL & NoSQL databases...).*

Let's see how this data can be used within the Lakehouse to analyse our customer transactions & detect potential fraud in real time.
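As a rough illustration of how the past fraud report can act as a label, the sketch below flags each transaction that appears in the report. The field names (`transaction_id`, `customer_id`, `amount`) and the sample rows are assumptions made for this example, not the demo's actual schema.

```python
from typing import Dict, List, Set


def label_transactions(
    transactions: List[Dict], fraud_reports: List[Dict]
) -> List[Dict]:
    """Add an `is_fraud` flag to each transaction found in the fraud report."""
    fraudulent_ids: Set[str] = {r["transaction_id"] for r in fraud_reports}
    return [
        {**t, "is_fraud": t["transaction_id"] in fraudulent_ids}
        for t in transactions
    ]


# Hypothetical sample data, for illustration only.
transactions = [
    {"transaction_id": "t1", "customer_id": "c1", "amount": 120.0},
    {"transaction_id": "t2", "customer_id": "c2", "amount": 9800.0},
]
fraud_reports = [{"transaction_id": "t2"}]

labeled = label_transactions(transactions, fraud_reports)
```

In the Lakehouse the same idea would typically be expressed as a join between the transaction table and the fraud report table.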

1/ Ingesting and preparing the data (Data Engineering)

Our first step is to ingest and clean the raw data we received so that our Data Analyst team can start running analysis on top of it.

Delta Lake

All the tables we'll create in the Lakehouse will be stored as Delta Lake tables. [Delta Lake](https://delta.io) is an open storage framework for reliability and performance.

It provides many features *(ACID Transactions, DELETE/UPDATE/MERGE, zero-copy clone, Change Data Capture...)*.

For more details on Delta Lake, run `dbdemos.install('delta-lake')`

Simplify ingestion with Spark Declarative Pipelines (SDP)

Databricks simplifies data ingestion and transformation with Spark Declarative Pipelines by allowing SQL users to create advanced pipelines, in batch or streaming. The engine will simplify pipeline deployment and testing and reduce operational complexity, so that you can focus on your business transformation and ensure data quality.

Open the FSI Banking & Fraud Spark Declarative Pipelines pipeline or the [SQL notebook]($./01-Data-ingestion/01.1-sdp-sql/01-SDP-fraud-detection-SQL) *(Alternative: SDP Python version will be available soon)*.

For more details on SDP: `dbdemos.install('pipeline-bike')` or `dbdemos.install('declarative-pipeline-cdc')`
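One way to picture the data quality side of such a pipeline: each rule is a named predicate, rows that violate a rule are dropped, and violations are counted per rule. The sketch below is plain Python and is not the SDP API; the rule names, fields and the `apply_expectations` helper are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]
Expectation = Tuple[str, Callable[[Row], bool]]


def apply_expectations(
    rows: List[Row], expectations: List[Expectation]
) -> Tuple[List[Row], Dict[str, int]]:
    """Keep rows that pass every rule and count violations per rule."""
    kept: List[Row] = []
    violations = {name: 0 for name, _ in expectations}
    for row in rows:
        failed = [name for name, check in expectations if not check(row)]
        for name in failed:
            violations[name] += 1
        if not failed:
            kept.append(row)
    return kept, violations


# Hypothetical quality rules for a raw transaction feed.
expectations: List[Expectation] = [
    ("valid_id", lambda r: r.get("id") is not None),
    (
        "positive_amount",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] > 0,
    ),
]

raw_rows = [
    {"id": "t1", "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": "t3", "amount": -1.0},
]
clean_rows, violation_counts = apply_expectations(raw_rows, expectations)
```

In the actual pipeline these rules would be declared alongside the table definitions, and the engine would track the violation metrics for you.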

2/ Securing data & governance (Unity Catalog)

Now that our first tables have been created, we need to grant READ access to our Data Analyst team so they can start analyzing our banking database information.

Let's see how Unity Catalog provides security & governance across our data assets, including data lineage and audit logs.

Note that Unity Catalog integrates Delta Sharing, an open protocol to share your data with any external organization, regardless of their stack. For more details: `dbdemos.install('delta-sharing-airlines')`

Open the [Unity Catalog notebook]($./02-Data-governance/02-UC-data-governance-ACL-fsi-fraud) to see how to set up ACLs and explore lineage with the Data Explorer.
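As a hedged sketch of what granting read access can look like, the helper below builds the SQL `GRANT` statements a group needs to read a set of tables: `USE CATALOG`, `USE SCHEMA`, then `SELECT` on each table. The catalog, schema, table and group names are placeholders, and `build_read_grants` is a hypothetical helper, not part of the demo.

```python
from typing import List


def quote(identifier: str) -> str:
    """Backtick-quote an identifier, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def build_read_grants(
    catalog: str, schema: str, tables: List[str], principal: str
) -> List[str]:
    """Return GRANT statements giving `principal` read access to `tables`."""
    cat, sch, who = quote(catalog), quote(schema), quote(principal)
    statements = [
        f"GRANT USE CATALOG ON CATALOG {cat} TO {who}",
        f"GRANT USE SCHEMA ON SCHEMA {cat}.{sch} TO {who}",
    ]
    statements += [
        f"GRANT SELECT ON TABLE {cat}.{sch}.{quote(table)} TO {who}"
        for table in tables
    ]
    return statements


# Placeholder names, for illustration only.
grants = build_read_grants(
    "main", "fsi_fraud", ["transactions", "customers"], "analysts"
)
# In a Databricks notebook, each statement could then be run with spark.sql(stmt).
```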

3/ Analysing existing Fraud (BI / Data warehousing / SQL)

Our datasets are now properly ingested, secured, high-quality, and easily discoverable within our organization.

Data Analysts are now ready to run interactive BI queries with low latency & high throughput, including on Serverless data warehouses that start and stop instantly.

Let's see how Data Warehousing can be done using Databricks, including integration with external BI solutions like PowerBI, Tableau and others!

Open the [Data warehousing notebook]($./03-BI-data-warehousing/03-BI-Datawarehousing-fraud) to start running your BI queries, or directly open the Banking Fraud Analysis dashboard.
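To give a feel for the kind of BI query involved, the sketch below computes a fraud rate per country. It uses Python's built-in SQLite as a stand-in so it can run anywhere; on Databricks the same SQL would run on a SQL warehouse against the Delta tables. The table layout and sample rows are assumptions made for this example.

```python
import sqlite3

# In-memory SQLite database standing in for the Lakehouse tables.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions "
    "(id TEXT, country TEXT, amount REAL, is_fraud INTEGER)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        ("t1", "FR", 120.0, 0),
        ("t2", "FR", 9800.0, 1),
        ("t3", "US", 45.0, 0),
        ("t4", "US", 60.0, 0),
    ],
)

# Fraud rate per country, highest first.
query = """
SELECT country,
       COUNT(*) AS nb_transactions,
       SUM(is_fraud) AS nb_fraud,
       ROUND(100.0 * SUM(is_fraud) / COUNT(*), 1) AS fraud_rate_pct
FROM transactions
GROUP BY country
ORDER BY fraud_rate_pct DESC
"""
rows = conn.execute(query).fetchall()
conn.close()
```

Each result row holds the country, its transaction count, its fraud count and the fraud rate as a percentage, which is the shape a dashboard tile would typically consume.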

4/ Predict Fraud risk with Data Science & AutoML

Being able to run analysis on our past data already gave us a lot of insight to drive our business. We can better understand our customer data and past fraud.

However, knowing where we had fraud in the past isn't enough. We now need to take it to the next level and build a predictive model to detect potential threats before they happen, reducing our risk and increasing customer satisfaction.

This is where the value of the Lakehouse comes in. Within the same platform, anyone can start building ML models to run such analysis, including with low-code solutions such as AutoML.

ML: Fraud Detection Model Training

Let's see how Databricks accelerates ML projects with AutoML: one-click model training in the [04.1-AutoML-FSI-fraud]($./04-Data-Science-ML/04.1-AutoML-FSI-fraud) notebook.

ML: Realtime Model Serving

Once our model is trained and available, Databricks Model Serving can be used to serve real-time inferences, so that transactions can be scored for fraud as they occur.

Review the [04.3-Model-serving-realtime-inference-fraud]($./04-Data-Science-ML/04.3-Model-serving-realtime-inference-fraud) notebook.
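As a minimal sketch of what a real-time scoring call involves, the helper below builds the URL and JSON body for a request to a serving endpoint, using the `dataframe_records` input format. It only prepares the request and does not send it; the workspace URL, endpoint name and feature names are placeholders, and a real call would also need an authorization header with a valid token.

```python
import json
from typing import Dict, List


def build_scoring_request(
    workspace_url: str, endpoint_name: str, transactions: List[Dict]
) -> Dict[str, str]:
    """Build the URL and JSON body for a model serving invocation."""
    base = workspace_url.rstrip("/")
    url = f"{base}/serving-endpoints/{endpoint_name}/invocations"
    body = json.dumps({"dataframe_records": transactions})
    return {"url": url, "body": body}


# Placeholder workspace, endpoint and features, for illustration only.
request = build_scoring_request(
    "https://example.cloud.databricks.com/",
    "fraud-endpoint",
    [{"amount": 9800.0, "country": "FR"}],
)
```

The body would then be sent as an HTTP POST, and the response would carry one prediction per submitted transaction.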

5/ Deploying and orchestrating the full workflow

While our data pipeline is almost complete, we're missing one last step: orchestrating the full workflow in production.

With the Databricks Lakehouse, there is no need to manage an external orchestrator to run your jobs. Databricks Workflows simplifies running all your jobs, with advanced alerting, monitoring, branching options, etc.

Open the [workflow and orchestration notebook]($./06-Workflow-orchestration/06-Workflow-orchestration-fsi-fraud) to schedule our pipeline (data ingestion, model re-training, etc.).
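Conceptually, orchestrating the workflow means running tasks in an order that respects their dependencies. The sketch below illustrates that idea with Python's standard `graphlib` module; it is not the Databricks Workflows API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each one maps to the set of tasks it depends on.
workflow = {
    "ingest_data": set(),
    "refresh_dashboard": {"ingest_data"},
    "retrain_model": {"ingest_data"},
    "deploy_model": {"retrain_model"},
}

# A valid execution order: every task appears after its dependencies.
execution_order = list(TopologicalSorter(workflow).static_order())
```

Tasks with no dependency between them, such as the dashboard refresh and the model retraining here, could run in parallel in a real orchestrator.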

Conclusion

We demonstrated how to implement an end-to-end pipeline with the Lakehouse, using a single, unified and secure platform:

- Data ingestion

- Data analysis / DW / BI

- Data science / ML

- Workflow & orchestration

As a result, our analyst team was able to build a system that not only helps understand past fraud, but also scores the fraud risk of new transactions in real time so that action can be taken accordingly.

This was only an introduction to the Databricks Platform. For more details, contact your account team and explore more demos with `dbdemos.list()`