IoT Platform with the Databricks Data Intelligence Platform - Ingesting Real-Time Industrial Sensor Data for Prescriptive Maintenance

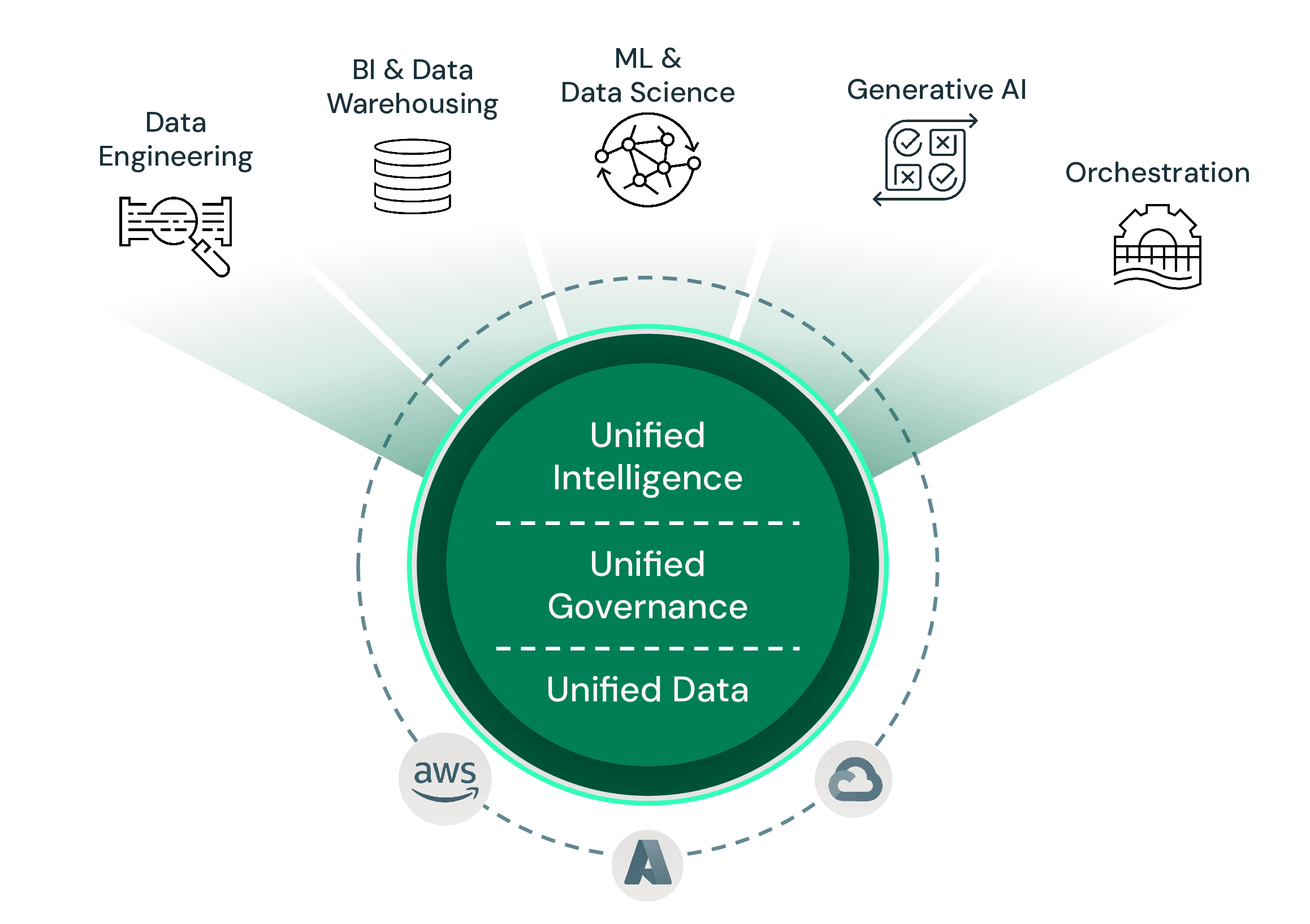

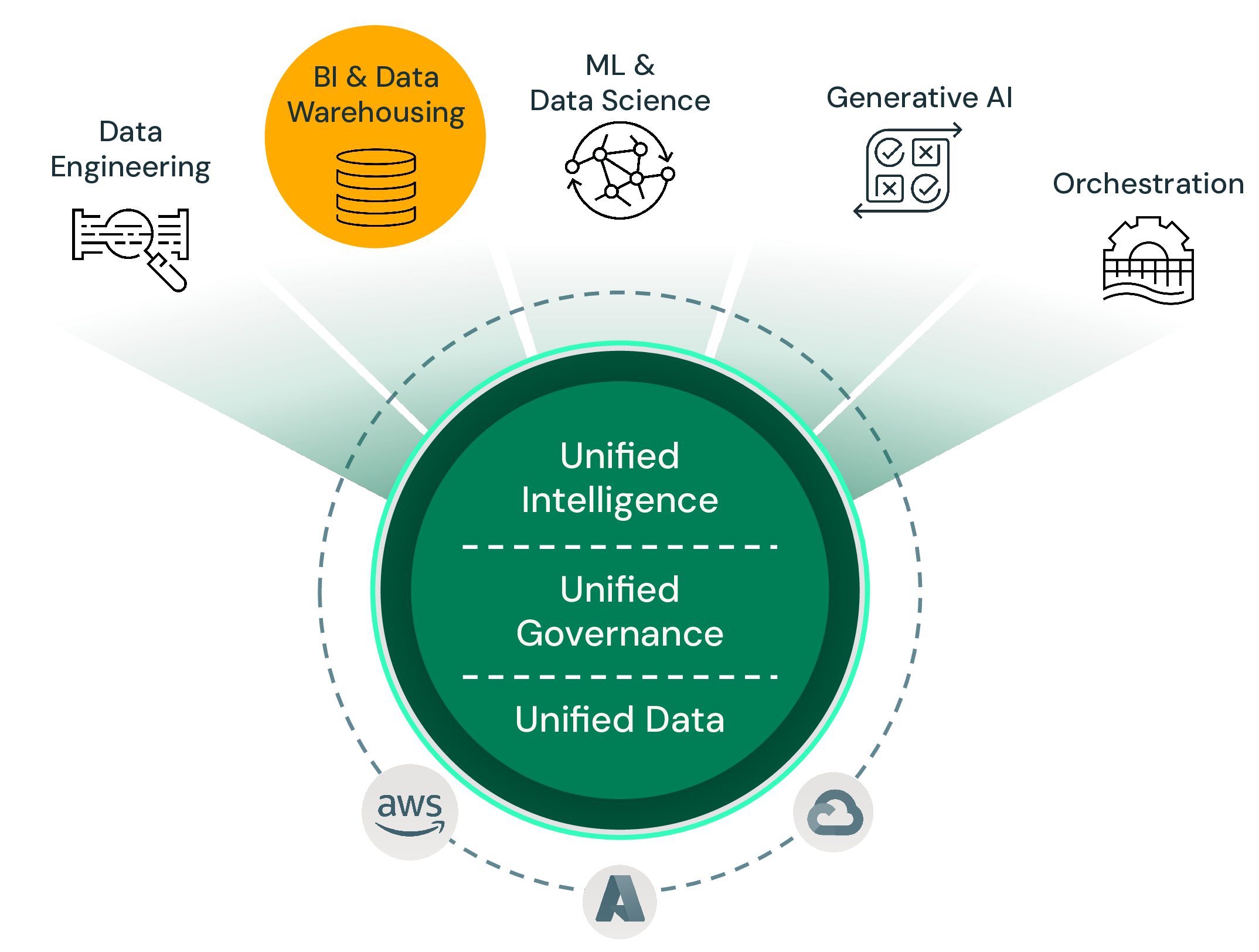

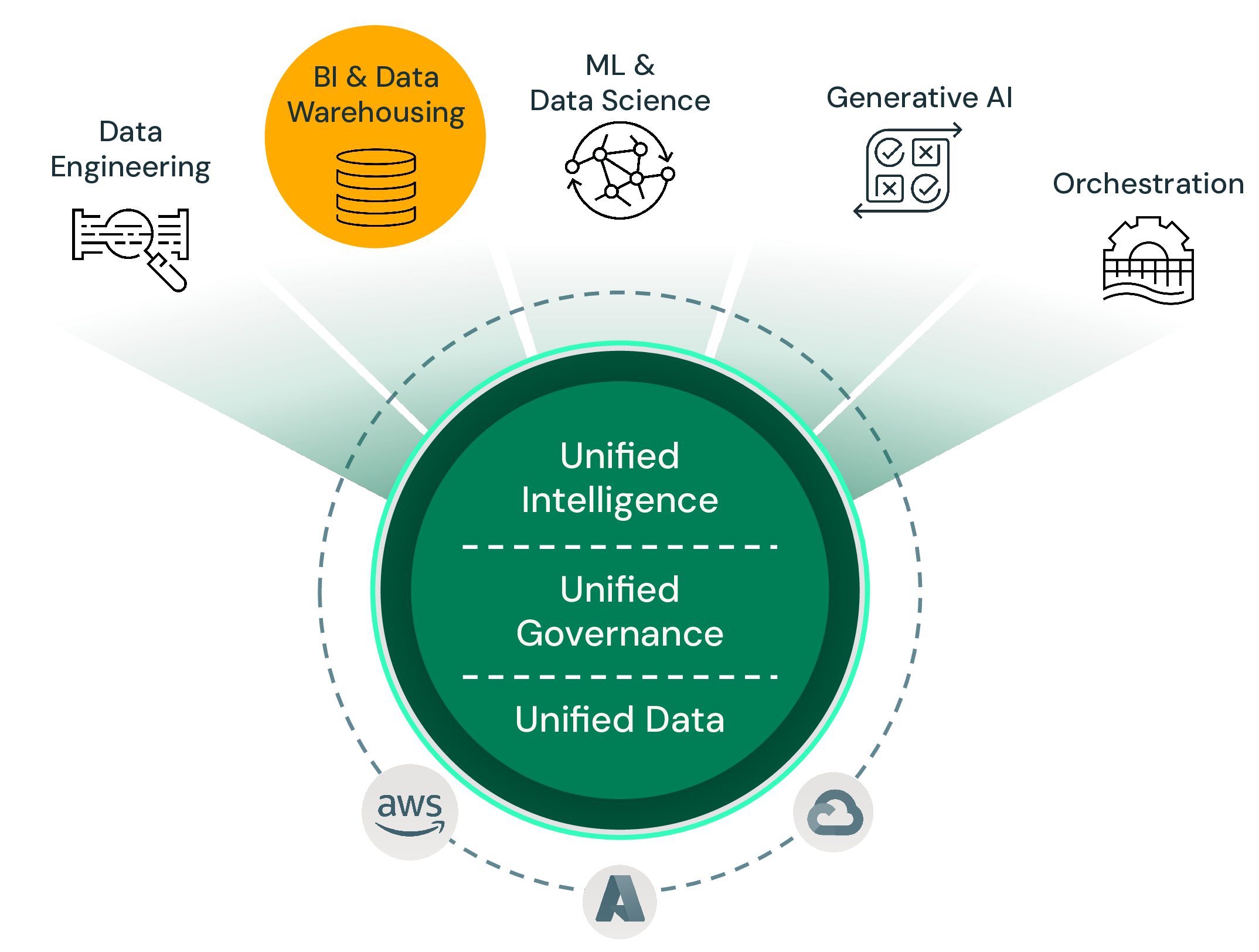

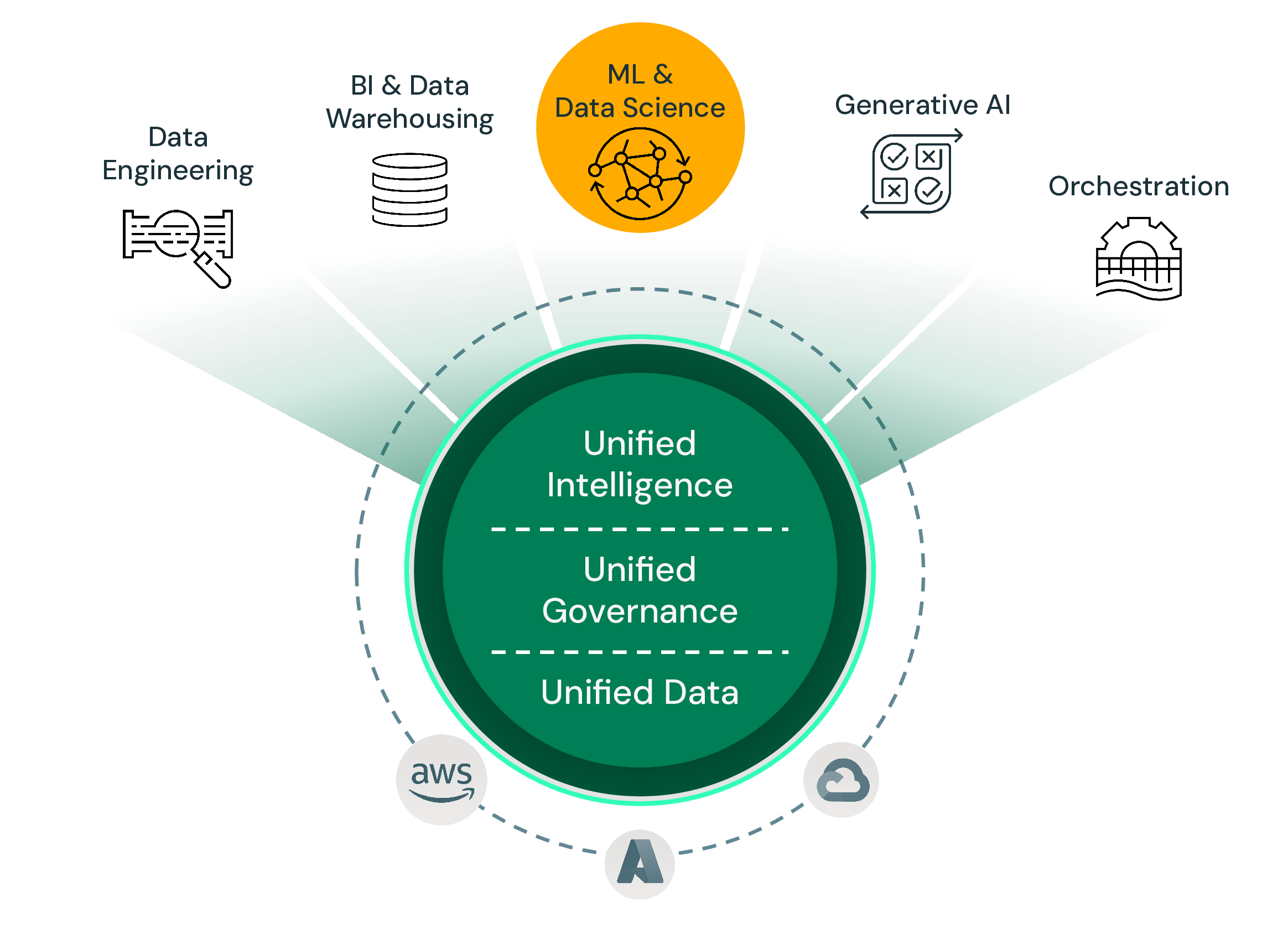

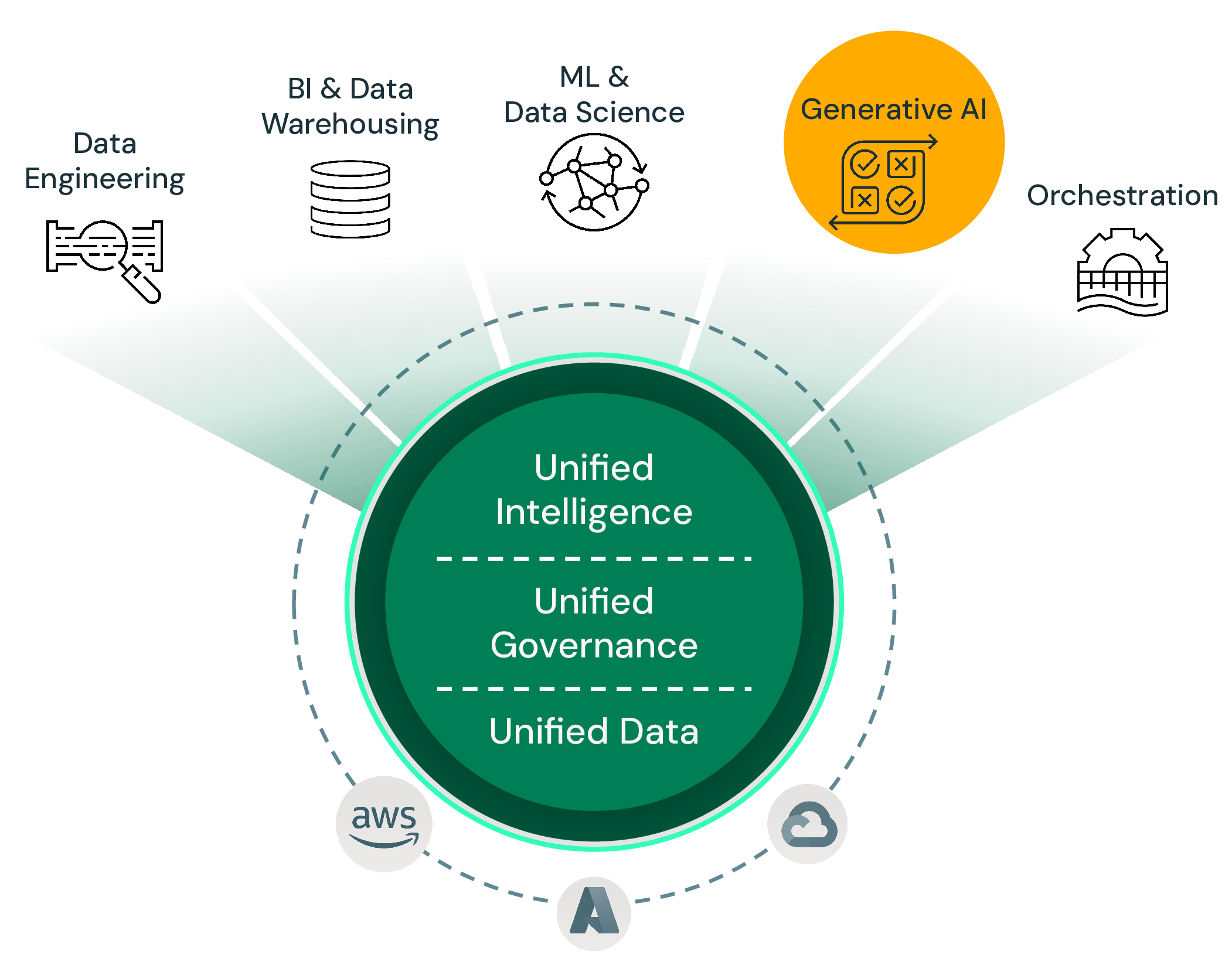

What is the Databricks Data Intelligence Platform for IoT & Manufacturing?

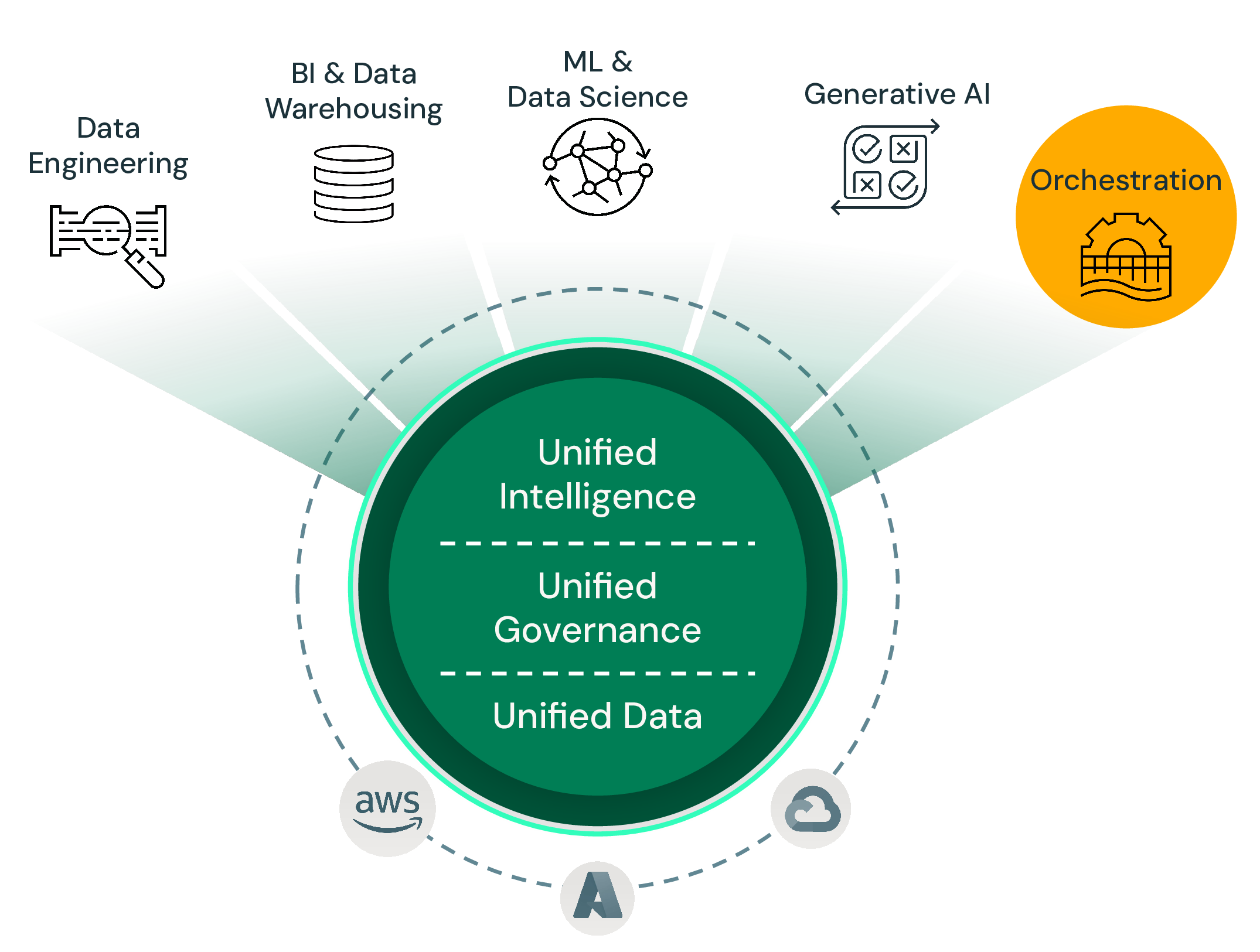

The Databricks Data Intelligence Platform for Manufacturing unlocks the full value of manufacturing data, enabling intelligent networks, enhanced customer experiences, smarter products, and sustainable businesses. It empowers data teams with unmatched scalability, real-time insights, and innovative capabilities across all data types and sources. Manufacturers benefit from reduced costs, increased productivity, improved customer responsiveness, and accelerated innovation. The platform integrates diverse data sources with top-tier AI processing and offers manufacturing-specific Solution Accelerators and partners for powerful real-time decision-making.

**Intelligent**

Databricks combines generative AI with the unification benefits of a lakehouse to power a Data Intelligence Engine that understands the unique semantics of your data. This allows the Databricks Platform to automatically optimize performance and manage infrastructure in ways unique to your business.

**Simple** Natural language substantially simplifies the user experience on Databricks. The Data Intelligence Engine understands your organization’s language, so searching for and discovering new data is as easy as asking a coworker a question. Natural language assistance also speeds up the development of new data and applications by helping you write code, remediate errors, and find answers.

**Private** Data and AI applications require strong governance and security, especially with the advent of generative AI. Databricks provides an end-to-end MLOps and AI development solution that’s built upon our unified approach to governance and security. You’re able to pursue all your AI initiatives — from using APIs like OpenAI to custom-built models — without compromising data privacy and IP control.

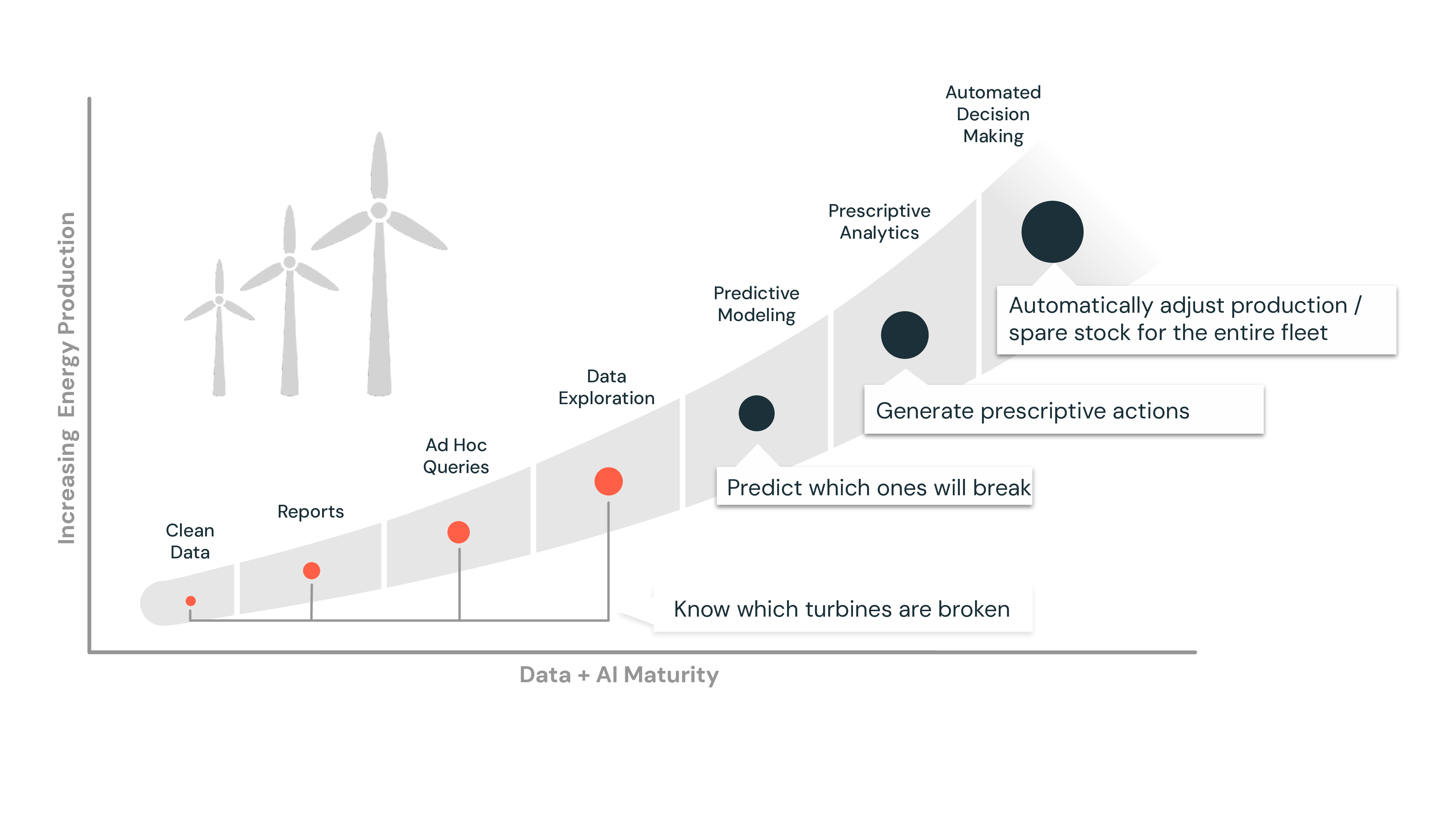

Wind Turbine Prescriptive Maintenance with the Databricks Data Intelligence Platform: Bringing Generative AI to Predictive Maintenance

Being able to collect and centralize industrial equipment information in real time is critical in the energy space. When a wind turbine is down, it is not generating power, which leads to poor customer service and lost revenue. Data is the key to unlocking critical capabilities such as energy optimization, anomaly detection, and predictive maintenance. The rapid rise of Generative AI makes it possible to go further: not only predicting when equipment is likely to fail, but also generating prescriptive maintenance actions that prevent failures before they arise and optimize equipment performance. This enables a shift from predictive to prescriptive maintenance.

Prescriptive maintenance examples include:

- Analyze equipment IoT sensor data in real time

- Predict mechanical failure in an energy pipeline

- Diagnose root causes for predicted failure and generate prescriptive actions

- Detect abnormal behavior in a production line

- Optimize supply chain of parts and staging for scheduled maintenance and repairs

What we'll build

In this demo, we'll build an end-to-end IoT platform to collect real-time data from multiple sources.

We'll create a predictive model to forecast wind turbine failures and use it to generate maintenance work orders, reducing downtime and increasing Overall Equipment Effectiveness (OEE).
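For reference, OEE is conventionally computed as the product of three ratios: availability, performance, and quality. The sketch below shows how an availability gain from fewer outages feeds into OEE. The function name and all input figures are made up for illustration and are not taken from the demo data.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Overall Equipment Effectiveness: product of three ratios, each in [0, 1]."""
    factors = {"availability": availability, "performance": performance, "quality": quality}
    for name, value in factors.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return availability * performance * quality


# Hypothetical turbine: running 90% of planned time, at 95% of its rated
# output while running, with 99% of the output usable.
baseline = oee(0.90, 0.95, 0.99)

# Same turbine if fewer unplanned outages lift availability to 96%.
improved = oee(0.96, 0.95, 0.99)

print(f"baseline OEE: {baseline:.3f}, improved OEE: {improved:.3f}")
```

Because the three factors multiply, a gain in availability carries through to OEE in proportion, which is why reducing downtime is the lever this demo focuses on.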

Additionally, we'll develop a dashboard for the Turbine Maintenance team to monitor turbines, identify those at risk, and review maintenance work orders, ensuring we meet our productivity goals.

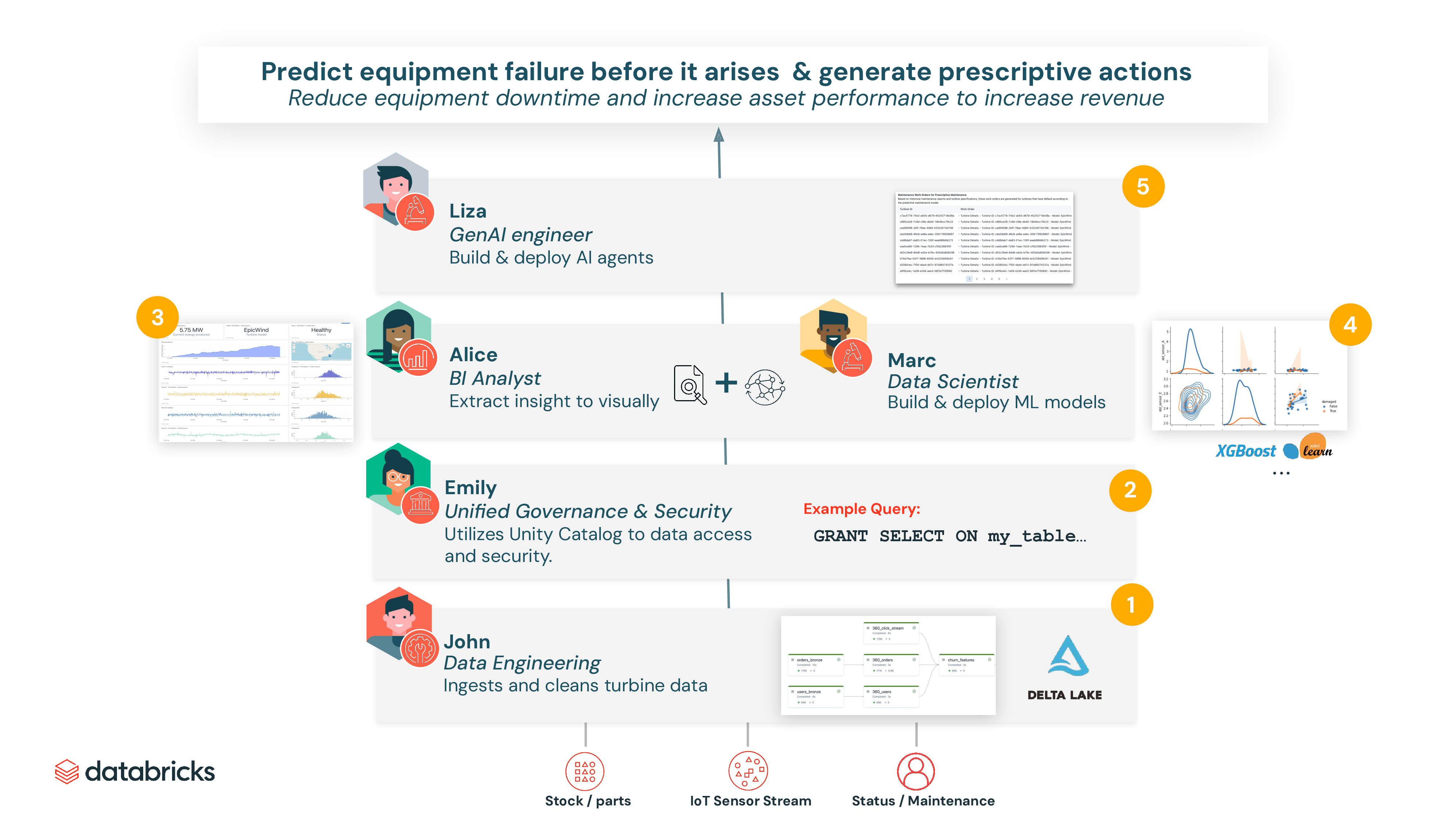

At a very high level, this is the flow we will implement:

1. Ingest and create our IoT database and tables, which are easily queryable via SQL.

2. Secure data and grant read access to the Data Analyst and Data Science teams.

3. Run BI queries to analyze existing failures.

4. Build an ML model to monitor our wind turbine farm & trigger predictive maintenance operations.

5. Generate maintenance work orders for field service engineers utilizing Generative AI.

Being able to predict which wind turbine will potentially fail is only the first step toward increasing our wind turbine farm's efficiency. Once we have a model that predicts upcoming maintenance needs, we can dynamically adapt our spare part stock, generate work orders for field service engineers, and even automatically dispatch a maintenance team with the proper equipment.

Our dataset

To simplify this demo, we'll consider that an external system is periodically sending data into our blob storage (S3/ADLS/GCS):

- Turbine data *(location, model, identifier, etc.)*

- Wind turbine sensors, every second *(energy produced, vibration, typically in streaming)*

- Turbine status over time, labelled by our analyst team, and historical maintenance reports *(historical data to train our model on and to index into a vector database)*
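To make the shape of the first two feeds concrete, here is a minimal sketch of what individual records might look like, and how a sensor reading can be joined with its turbine's metadata. All field names, identifiers, and values are assumptions for illustration; the actual demo schema may differ. The status labels and maintenance reports are left out for brevity.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class Turbine:
    """Static metadata, one record per turbine (hypothetical fields)."""
    turbine_id: str
    model: str
    lat: float
    lon: float


@dataclass(frozen=True)
class SensorReading:
    """One per-second measurement sent by a turbine (hypothetical fields)."""
    turbine_id: str
    timestamp: datetime
    energy: float
    vibration: float


def enrich(reading: SensorReading, turbines: dict) -> dict:
    """Attach static turbine metadata to a single reading (a lookup join)."""
    turbine = turbines[reading.turbine_id]
    return {**asdict(reading), "model": turbine.model, "lat": turbine.lat, "lon": turbine.lon}


turbines = {"T-001": Turbine("T-001", "EpicWind", 43.52, -1.53)}
reading = SensorReading(
    turbine_id="T-001",
    timestamp=datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc),
    energy=1.42,
    vibration=0.08,
)
row = enrich(reading, turbines)
print(row)
```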

*Note that at a technical level, our data could come from any source. Databricks can ingest data from any system (Salesforce, Fivetran, message queues like Kafka, blob storage, SQL & NoSQL databases...).*

Let's see how this data can be used within the Data Intelligence Platform to analyze sensor data, trigger predictive maintenance and generate work orders.

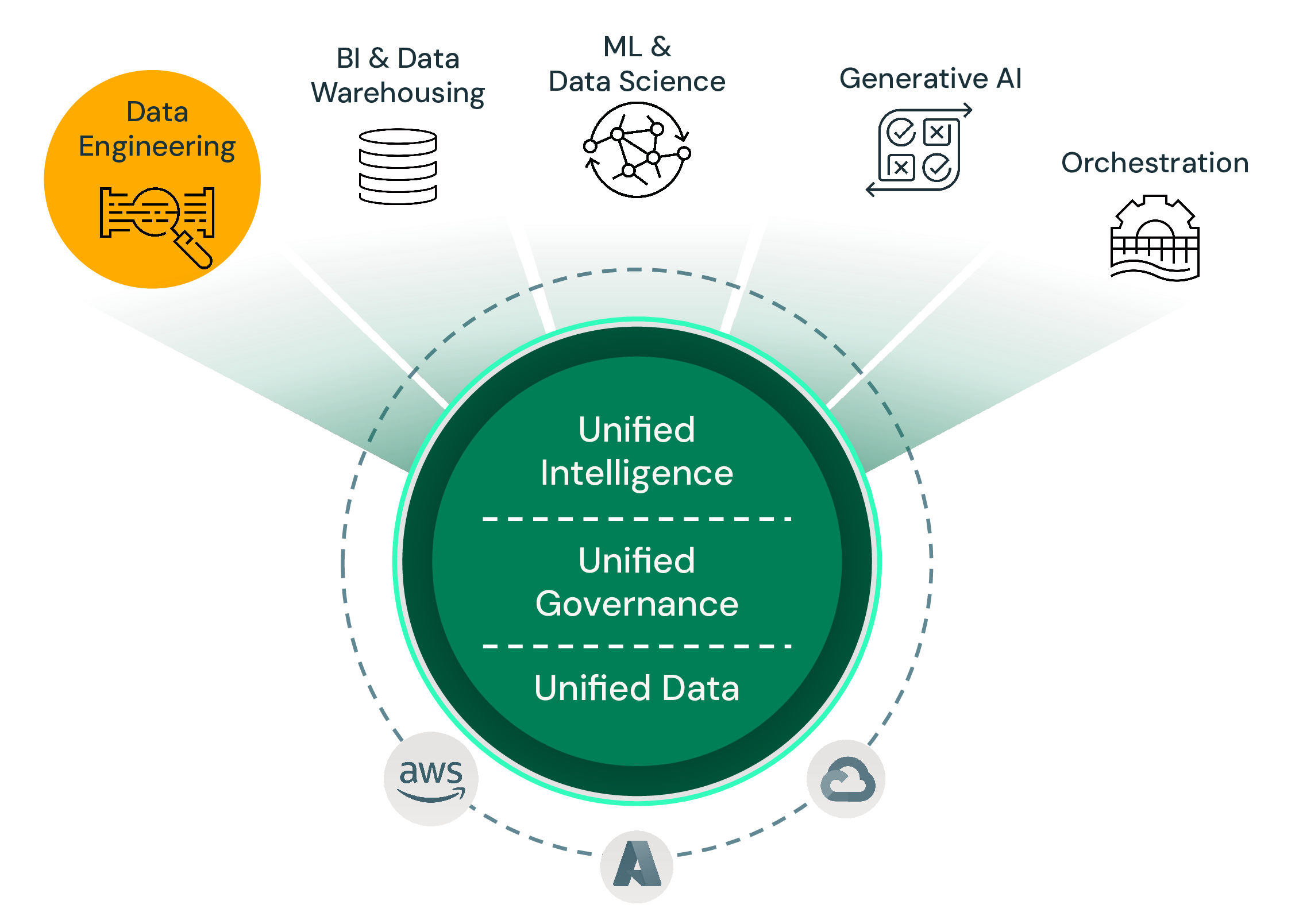

1/ Ingesting and Preparing the Data (Data Engineering)

Our first step is to ingest and clean the raw data we received so that our Data Analyst team can start running analysis on top of it.

Delta Lake

All the tables we'll create in the Lakehouse will be stored as Delta Lake tables. [Delta Lake](https://delta.io) is an open storage framework for reliability and performance.

It provides many features *(ACID transactions, DELETE/UPDATE/MERGE, zero-copy clones, Change Data Capture...)*.

For more details on Delta Lake, run `dbdemos.install('delta-lake')`
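As an illustration of the MERGE capability mentioned above, the sketch below builds an upsert statement for a turbine metadata table. The helper name, the table names (`main.iot.turbine`, `main.iot.turbine_updates`), and the key column are hypothetical. In a Databricks notebook the resulting string could be passed to the predefined `spark` session with `spark.sql(statement)`.

```python
def build_turbine_upsert(target: str, source: str, key: str = "turbine_id") -> str:
    """Return a Delta Lake MERGE statement that upserts `source` rows into `target`."""
    return (
        f"MERGE INTO {target} AS t\n"
        f"USING {source} AS s\n"
        f"ON t.{key} = s.{key}\n"
        "WHEN MATCHED THEN UPDATE SET *\n"   # overwrite existing turbines
        "WHEN NOT MATCHED THEN INSERT *"     # add turbines seen for the first time
    )


# Hypothetical table names, for illustration only.
statement = build_turbine_upsert("main.iot.turbine", "main.iot.turbine_updates")
print(statement)
```

Because a MERGE runs as a single ACID transaction, readers of the target table see either the state before the upsert or the state after it, never a partial update.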

Simplify ingestion with Spark Declarative Pipelines

Databricks simplifies data ingestion and transformation with Spark Declarative Pipelines by allowing SQL users to create advanced pipelines via batch or streaming. Databricks also simplifies pipeline deployment, testing, and tracking data quality which reduces operational complexity, so that you can focus on the needs of the business.
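The idea behind data quality tracking can be sketched in plain Python: each rule is a named predicate, rows that break a rule are dropped, and failures are counted per rule. This is only a conceptual illustration, not the pipeline API. The rule names and sample rows are invented.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]

# Hypothetical quality rules for raw sensor rows.
EXPECTATIONS: Dict[str, Callable[[Row], bool]] = {
    "valid_turbine_id": lambda r: bool(r.get("turbine_id")),
    "non_negative_energy": lambda r: r.get("energy") is not None and r["energy"] >= 0,
}


def apply_expectations(
    rows: List[Row], expectations: Dict[str, Callable[[Row], bool]]
) -> Tuple[List[Row], Dict[str, int]]:
    """Keep rows that pass every rule; count how many rows failed each rule."""
    kept: List[Row] = []
    failures = {name: 0 for name in expectations}
    for row in rows:
        failed = [name for name, check in expectations.items() if not check(row)]
        for name in failed:
            failures[name] += 1
        if not failed:
            kept.append(row)
    return kept, failures


raw = [
    {"turbine_id": "T-001", "energy": 1.42},
    {"turbine_id": None, "energy": 0.97},     # missing identifier
    {"turbine_id": "T-002", "energy": -3.0},  # impossible reading
]
clean, failure_counts = apply_expectations(raw, EXPECTATIONS)
print(clean)
print(failure_counts)
```

Keeping the per-rule counts alongside the cleaned rows is what makes quality trackable over time rather than silently filtered.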

Open the Wind Turbine Spark Declarative Pipelines pipeline or the [SQL notebook]($./01-Data-ingestion/01.1-SDP-SQL/01.1-SDP-Wind-Turbine-SQL) *(Alternatives: [Spark Declarative Pipelines Python]($./01-Data-ingestion/01.2-SDP-python/01.1-SDP-Wind-Turbine-Python) - [plain Delta+Spark version]($./01-Data-ingestion/plain-spark-delta-pipeline/01.5-Delta-pipeline-spark-iot-turbine))*.

For more details on Spark Declarative Pipelines: `dbdemos.install('pipeline-bike')` or `dbdemos.install('declarative-pipeline-cdc')`

2/ Securing Data & Governance (Unity Catalog)

Now that our first tables have been created, we need to grant our Data Analyst team READ access to be able to start analyzing our turbine failure information.

Let's see how Unity Catalog provides security and governance across our data assets, including data lineage and audit logs.

Note that Unity Catalog integrates Delta Sharing, an open protocol to share your data with any external organization, regardless of their software or data stack. For more details: `dbdemos.install('delta-sharing-airlines')`

Open the [Unity Catalog notebook]($./02-Data-governance/02-UC-data-governance-security-iot-turbine) to see how to set up ACLs and explore lineage with the Data Explorer.
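As a rough sketch of what granting read access involves, the helper below generates the Unity Catalog statements a principal needs to query a set of tables: `USE CATALOG` on the catalog, `USE SCHEMA` on the schema, and `SELECT` on each table. The catalog, schema, table, and group names are placeholders rather than the ones used in the demo.

```python
from typing import List


def grant_read_statements(catalog: str, schema: str, tables: List[str], principal: str) -> List[str]:
    """Build the GRANT statements that give `principal` read-only access to `tables`."""
    statements = [
        # Reading a table also requires access to its parent catalog and schema.
        f"GRANT USE CATALOG ON CATALOG {catalog} TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO `{principal}`",
    ]
    statements += [
        f"GRANT SELECT ON TABLE {catalog}.{schema}.{table} TO `{principal}`"
        for table in tables
    ]
    return statements


# Placeholder names, for illustration only.
grants = grant_read_statements("main", "iot", ["turbine", "sensor_bronze"], "analysts")
for statement in grants:
    print(statement)  # in a notebook: spark.sql(statement)
```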

3/ Analysing Failures (BI / Data warehousing / SQL)

Our datasets are now properly ingested, secured, of high quality, and easily discoverable within our organization.

Data Analysts are now ready to run interactive BI queries with low latency and high throughput. They can create a new compute cluster, use a shared cluster, or, for even faster response times, use Databricks serverless SQL warehouses, which start and stop instantly.

Let's see how Data Warehousing is done using Databricks! We will look at our built-in dashboards: Databricks provides a complete data platform from ingestion to analysis, and it also integrates with many popular BI tools such as Power BI, Tableau and others!

Open the [Datawarehousing notebook]($./03-BI-data-warehousing/03-BI-Datawarehousing-iot-turbine) to start running your BI queries, or directly open the Turbine analysis AI/BI dashboard.
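To give a flavor of the kind of BI query involved, here is a small self-contained example that counts faulty turbines per model. It uses Python's built-in `sqlite3` purely as a stand-in for a SQL warehouse so it can run anywhere. The table, columns, and rows are invented for illustration.

```python
import sqlite3

# In-memory database standing in for a SQL warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE turbine_status (turbine_id TEXT, model TEXT, abnormal_sensor TEXT)")
conn.executemany(
    "INSERT INTO turbine_status VALUES (?, ?, ?)",
    [
        ("T-001", "EpicWind", "ok"),
        ("T-002", "EpicWind", "sensor_B"),
        ("T-003", "SpeedWind", "ok"),
        ("T-004", "SpeedWind", "sensor_D"),
        ("T-005", "SpeedWind", "sensor_D"),
    ],
)

# Number of turbines and number of faulty turbines per model, worst first.
query = """
SELECT model,
       COUNT(*) AS turbines,
       SUM(CASE WHEN abnormal_sensor <> 'ok' THEN 1 ELSE 0 END) AS faulty
FROM turbine_status
GROUP BY model
ORDER BY faulty DESC, model
"""
rows = conn.execute(query).fetchall()
for model, turbines, faulty in rows:
    print(f"{model}: {faulty}/{turbines} turbines faulty")
conn.close()
```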

4/ Predict Failure with Data Science & Auto-ML

Being able to run analysis on our historical data provided the team with a lot of insights to drive our business. We can now better understand the impact of downtime and see which turbines are currently down in our near real-time dashboard.

However, knowing which turbines have failed isn't enough. We now need to take it to the next level and build a predictive model that detects potential failures before they happen, increasing uptime and minimizing costs.

This is where the Lakehouse value comes in. Within the same platform, anyone can start building an ML model to predict failures, using either traditional ML development or our low-code solution, AutoML.

Let's see how to train an ML model within 1 click with the [04.1-automl-iot-turbine-predictive-maintenance]($./04-Data-Science-ML/04.1-automl-iot-turbine-predictive-maintenance)
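To show the underlying idea in miniature, the sketch below fits a one-feature logistic regression with plain gradient descent, mapping a vibration level to a failure risk. It is a toy stand-in written from scratch, not what AutoML produces. The data points, units, and hyperparameters are invented.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs: List[float], ys: List[int], lr: float = 0.5, epochs: int = 2000) -> Tuple[float, float]:
    """Fit p(failure) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # derivative of log loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Invented training set: vibration level and whether the turbine later failed.
vibration = [0.1, 0.2, 0.3, 0.4, 1.6, 1.7, 1.8, 1.9]
failed = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic(vibration, failed)
risk_low = sigmoid(w * 0.25 + b)   # a calm turbine
risk_high = sigmoid(w * 1.75 + b)  # a strongly vibrating turbine
print(f"risk at 0.25: {risk_low:.2f}, risk at 1.75: {risk_high:.2f}")
```

A real model would use many sensor features and a held-out test set. The point here is only that the output is a risk score that downstream steps can act on.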

5/ Generate Maintenance Work Orders with Generative AI

The rise of Generative AI enables a shift from Predictive to Prescriptive Maintenance ML Models. By going from ML models to agent systems, we can now leverage the predictive model as one of the many components of the AI system. This opens up a whole lot of new opportunities for automation and efficiency gains, which will further increase uptime and minimize costs.

Databricks offers a set of tools to help developers build, deploy and evaluate production-quality AI agents such as Retrieval Augmented Generation (RAG) applications, including a vector database, model serving endpoints, governance, monitoring and evaluation capabilities.

_Disclaimer: if your organization doesn't allow (yet) the use of Databricks Vector Search and/or Model Serving, you can skip this section._

Let's create our first agent system with the [05.1-ai-tools-iot-turbine-prescriptive-maintenance]($./05-Generative-AI/05.1-ai-tools-iot-turbine-prescriptive-maintenance)
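The retrieval step of such a system can be illustrated without any external service: find the past maintenance report most similar to the predicted fault, then place it in the prompt sent to the language model. The sketch below uses a simple bag-of-words cosine similarity as a stand-in for a vector database. The reports, identifiers, and prompt wording are invented.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercased word counts; a crude substitute for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, reports: List[str], k: int = 1) -> List[str]:
    """Return the k reports most similar to the query."""
    q = tokenize(query)
    return sorted(reports, key=lambda r: cosine(q, tokenize(r)), reverse=True)[:k]


def build_prompt(turbine_id: str, fault: str, context: List[str]) -> str:
    """Assemble the text that would be sent to a language model."""
    past = "\n".join(f"- {report}" for report in context)
    return (
        f"Turbine {turbine_id} is predicted to fail: {fault}.\n"
        f"Relevant past maintenance reports:\n{past}\n"
        "Write a work order with the recommended maintenance actions."
    )


# Invented historical maintenance reports.
REPORTS = [
    "Gearbox vibration exceeded limits; replaced worn bearing and realigned shaft.",
    "Blade pitch motor overheated; cleaned cooling vents and replaced fan.",
    "Generator output dropped; tightened loose cabling and recalibrated sensor.",
]

fault = "high gearbox vibration on bearing"
context = retrieve(fault, REPORTS, k=1)
prompt = build_prompt("T-004", fault, context)
print(prompt)
```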

Automate Action to Reduce Turbine Outage Based on Predictions

We now have an end-to-end data pipeline analyzing sensor data, detecting potential failures and generating prescriptive actions based on past maintenance reports to prevent failures before they even arise. With that, we can now easily trigger follow-up actions to reduce outages such as:

- Schedule maintenance based on team availability and fault severity

- Stage parts and supplies according to predicted maintenance operations, while keeping stock on hand low

- Track the effectiveness of our predictive maintenance model by measuring its ROI

*Note: These actions are out of the scope of this demo and simply leverage the Predictive maintenance result from our ML model.*
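As an example of how simple such a follow-up action can be once predictions exist, the sketch below ranks pending work orders by severity and predicted failure probability, then assigns them to the crews available today. The data structure, severity scale, and values are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class WorkOrder:
    turbine_id: str
    failure_probability: float  # output of the predictive model
    severity: int               # hypothetical scale: 1 (minor) to 3 (critical)


def schedule(orders: List[WorkOrder], crews_available: int) -> Tuple[List[WorkOrder], List[WorkOrder]]:
    """Split orders into (scheduled today, deferred), most urgent first."""
    ranked = sorted(orders, key=lambda o: (o.severity, o.failure_probability), reverse=True)
    return ranked[:crews_available], ranked[crews_available:]


orders = [
    WorkOrder("T-002", failure_probability=0.62, severity=2),
    WorkOrder("T-004", failure_probability=0.91, severity=3),
    WorkOrder("T-005", failure_probability=0.55, severity=3),
    WorkOrder("T-009", failure_probability=0.80, severity=1),
]
today, deferred = schedule(orders, crews_available=2)
print("today:", [o.turbine_id for o in today])
print("deferred:", [o.turbine_id for o in deferred])
```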

Open the Prescriptive maintenance AI/BI dashboard to have a complete view of your wind turbine farm, including potential faulty turbines, work orders and actions to remedy that.

6/ Deploying and Orchestrating the Full Workflow

While our data pipeline is almost completed, we're missing one last step: orchestrating the full workflow in production.

With the Databricks Lakehouse, there is no need for an external orchestrator to run your jobs. Databricks Workflows manages all your jobs, with advanced alerting, monitoring, branching options, and more.

Open the [workflow and orchestration notebook]($./06-Workflow-orchestration/06-Workflow-orchestration-iot-turbine) to schedule our pipeline (data ingestion, model re-training, etc.).
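Conceptually, orchestrating the workflow means running tasks in an order that respects their dependencies. The sketch below expresses the demo's stages as a dependency graph and derives a valid execution order with Python's standard `graphlib` module. The task names are invented, and this is not the Databricks Workflows API.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
PIPELINE = {
    "ingest_sensor_data": set(),
    "retrain_model": {"ingest_sensor_data"},
    "score_turbines": {"retrain_model"},
    "generate_work_orders": {"score_turbines"},
    "refresh_dashboard": {"score_turbines", "generate_work_orders"},
}

# static_order() yields every task after all of its dependencies.
order = list(TopologicalSorter(PIPELINE).static_order())
for step, task in enumerate(order, start=1):
    print(f"{step}. {task}")
```

An orchestrator adds scheduling, retries, and alerting on top of this ordering, so a failed ingestion step stops the downstream tasks instead of feeding them stale data.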

Conclusion

We demonstrated how to implement an end-to-end pipeline with the Lakehouse, using a single, unified and secured platform. We saw:

- Data Ingestion

- Data Analysis / DW / BI

- Data Science / ML

- Generative AI

- Workflow & Orchestration

And as a result, our business analysis team was able to build a system to not only understand failures better but also forecast future failures and let the maintenance team take action accordingly.

*This was only an introduction to the Databricks Platform. For more details, contact your account team and explore more demos with `dbdemos.list()`!*