Democratizing the magic of ChatGPT with open models and Databricks Lakehouse

Large Language Models produce some amazing results, chatting and answering questions with seeming intelligence.

But how can you get LLMs to answer questions about _your_ specific datasets? Imagine answering questions based on your company's knowledge base, docs or Slack chats.

The good news is that this is easy to build on Databricks, leveraging open-source tooling and open LLMs.

Databricks Dolly: World's First Truly Open Instruction-Tuned LLM

As of now, most state-of-the-art models come with restrictive licenses and can't easily be used for commercial purposes. This is because they were trained or fine-tuned using non-open datasets.

To solve this challenge, Databricks released Dolly, the first truly open instruction-tuned LLM. Because Dolly was fine-tuned on `databricks-dolly-15k` (15,000 high-quality, human-generated prompt / response pairs designed specifically for instruction tuning large language models), it can be used as a starting point to create your own commercial model.

Building a gardening chat bot to answer our questions

In this demo, we'll be building a chat bot based on Dolly. As a gardening shop, we want to add a bot to our application that answers our customers' questions and recommends how they can care for their plants.

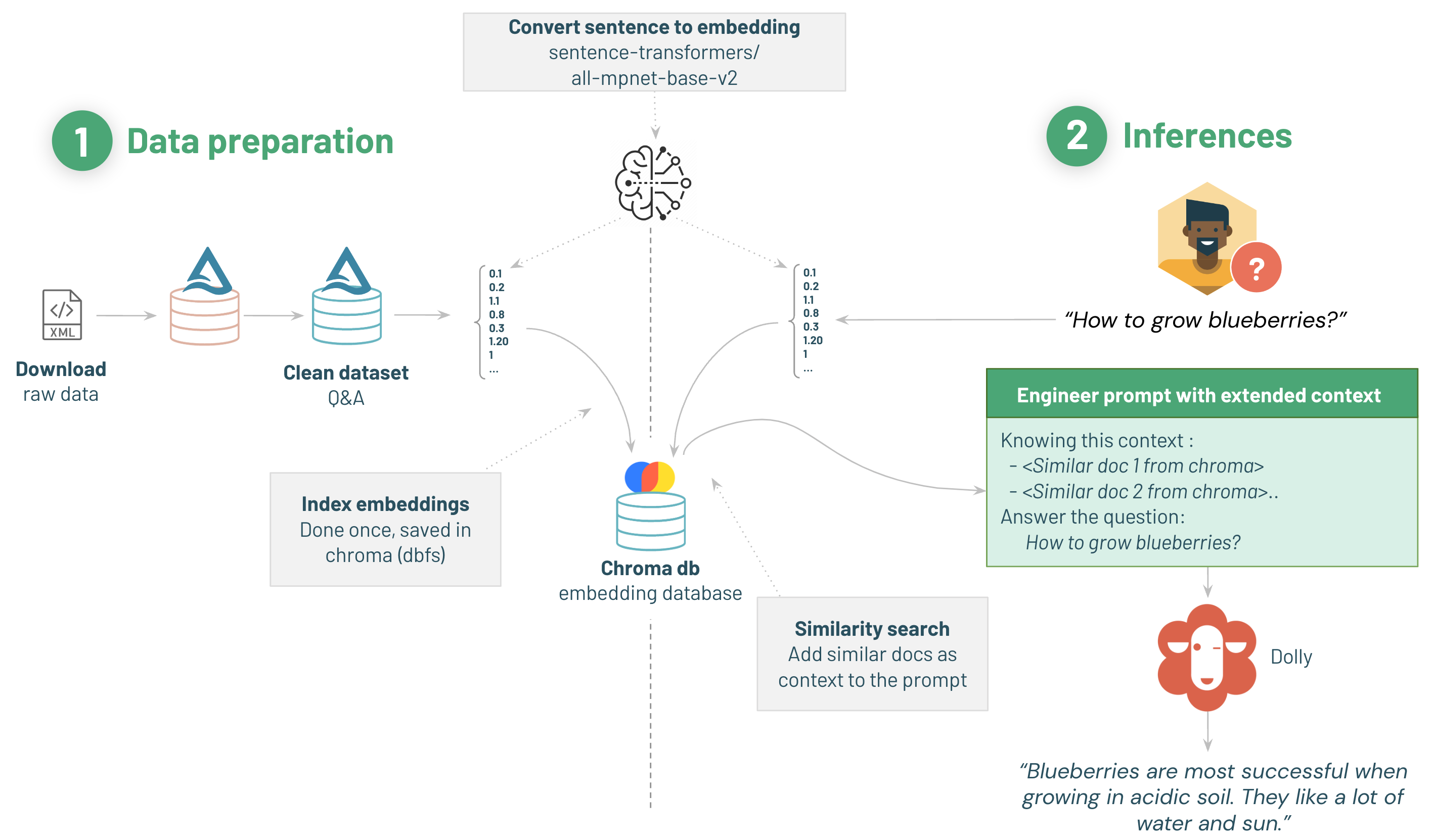

We will split this demo into 2 sections:

- 1/ **Data preparation**: ingest and clean our Q&A dataset, then transform it into embeddings stored in a vector database.

- 2/ **Q&A inference**: use Dolly to answer our query, supplying the most relevant Q&A entries as extra context in the prompt. This is also known as Prompt Engineering.
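To make the embedding and lookup idea concrete, here is a minimal sketch in plain Python. It is only an illustration: the bag-of-words `embed` function stands in for a real embedding model, the in-memory `index` list stands in for a vector database, and the sample documents and the names `embed`, `cosine` and `search` are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical cleaned Q&A documents (assumption: not taken from the demo dataset).
docs = [
    "Blueberries grow best in acidic soil with plenty of water and sun.",
    "Tomatoes need regular watering and at least six hours of sunlight.",
    "Prune roses in late winter to encourage new growth.",
]

# "Vector database": each document stored next to its embedding.
index = [(doc, embed(doc)) for doc in docs]


def search(query: str, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


top = search("How do I grow blueberries?", k=1)
print(top[0])
```

A real pipeline would swap `embed` for a sentence-embedding model and `index` for a persistent vector store, but the ranking step stays the same: embed the query, then return the closest documents.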

1/ Data Preparation & Vector database creation with Databricks Lakehouse

The most difficult part of creating our chat bot is the data collection, preparation and cleaning.

Databricks Lakehouse makes this simple! Leveraging Delta Lake, Delta Live Tables and Databricks' specialized execution engine, you can build simple data pipelines and drastically reduce your pipeline TCO.

Open the [02-Data-preparation]($./02-Data-preparation) notebook to ingest our data & start building our vector database.

2/ Prompt engineering for Question & Answers

Now that our specialized dataset is available, we can start leveraging Dolly to answer our questions.

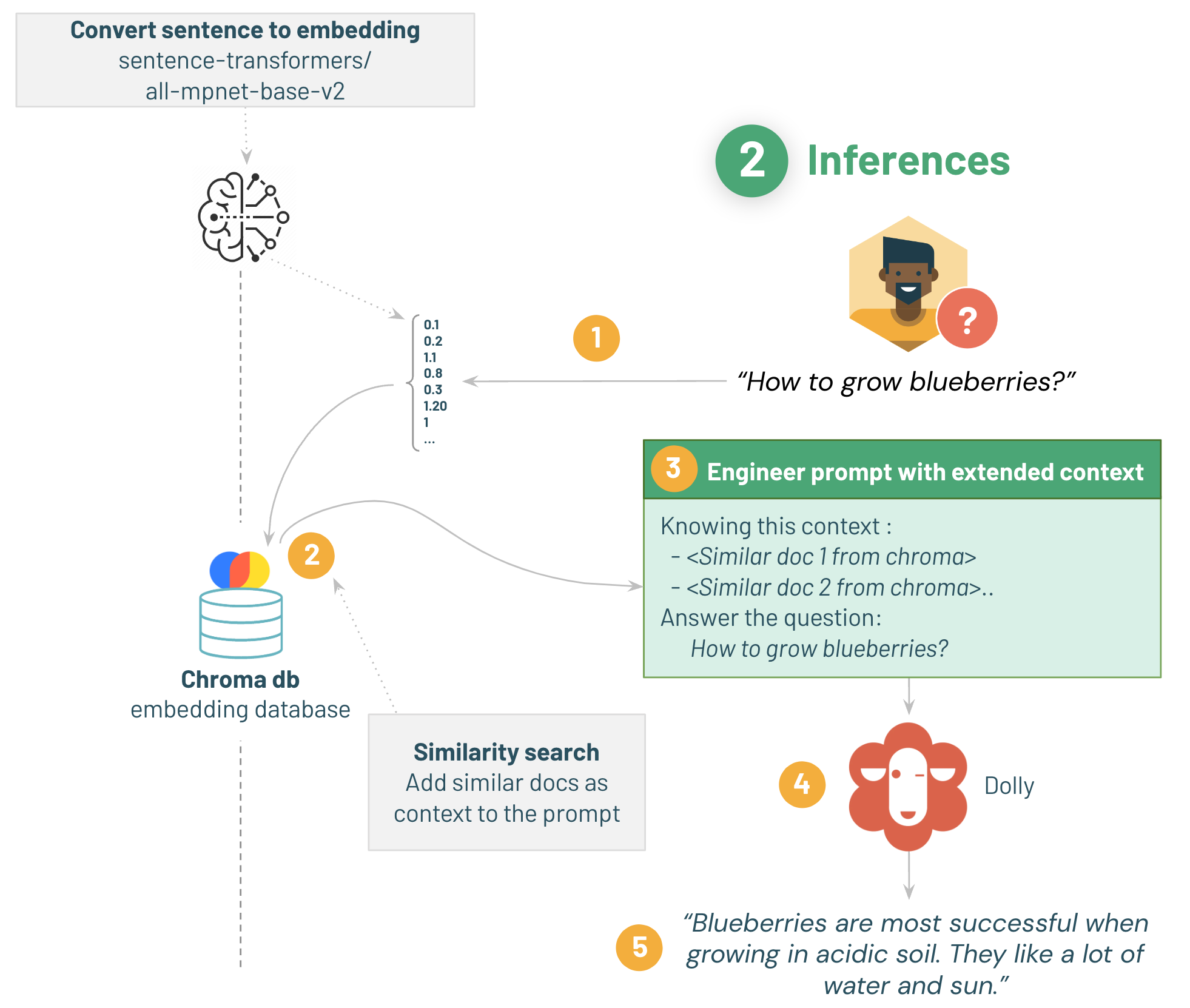

We will start with a simple Question / Answer process:

* Customer asks a question

* We fetch similar content from our Q&A dataset

* We engineer a prompt containing that content

* We send the prompt to Dolly

* We display the answer to our customer
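The steps above can be sketched in a few lines of plain Python. This is a sketch under assumptions, not the notebook's implementation: the template wording is illustrative, and `fake_retrieve` and `fake_generate` are hypothetical stand-ins for the vector database lookup and for the call to Dolly.

```python
from typing import Callable, List

# Illustrative template (assumption: the wording is not taken from the demo notebooks).
PROMPT_TEMPLATE = """You are a gardening assistant. Use only the context below to answer the question.

Context:
{context}

Question: {question}

Answer:"""


def build_prompt(question: str, similar_docs: List[str]) -> str:
    """Engineer a prompt that contains the retrieved Q&A content."""
    context = "\n".join(f"- {doc}" for doc in similar_docs)
    return PROMPT_TEMPLATE.format(context=context, question=question)


def answer(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Fetch similar content, build the prompt, send it to the model, return the answer."""
    similar_docs = retrieve(question)
    prompt = build_prompt(question, similar_docs)
    return generate(prompt)


def fake_retrieve(question: str) -> List[str]:
    """Hypothetical stand-in for the similarity search."""
    return ["Blueberries grow best in acidic soil."]


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for the LLM call."""
    return f"(model output for a prompt of {len(prompt)} characters)"


print(answer("How to grow blueberries?", fake_retrieve, fake_generate))
```

Passing `retrieve` and `generate` as functions keeps the flow testable without a model, and makes it easy to plug in the real components later.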

Open the next [03-Q&A-prompt-engineering-for-dolly]($./03-Q&A-prompt-engineering-for-dolly) notebook to learn how to build and improve your prompt with `langchain`, send your questions to Dolly & get great answers.

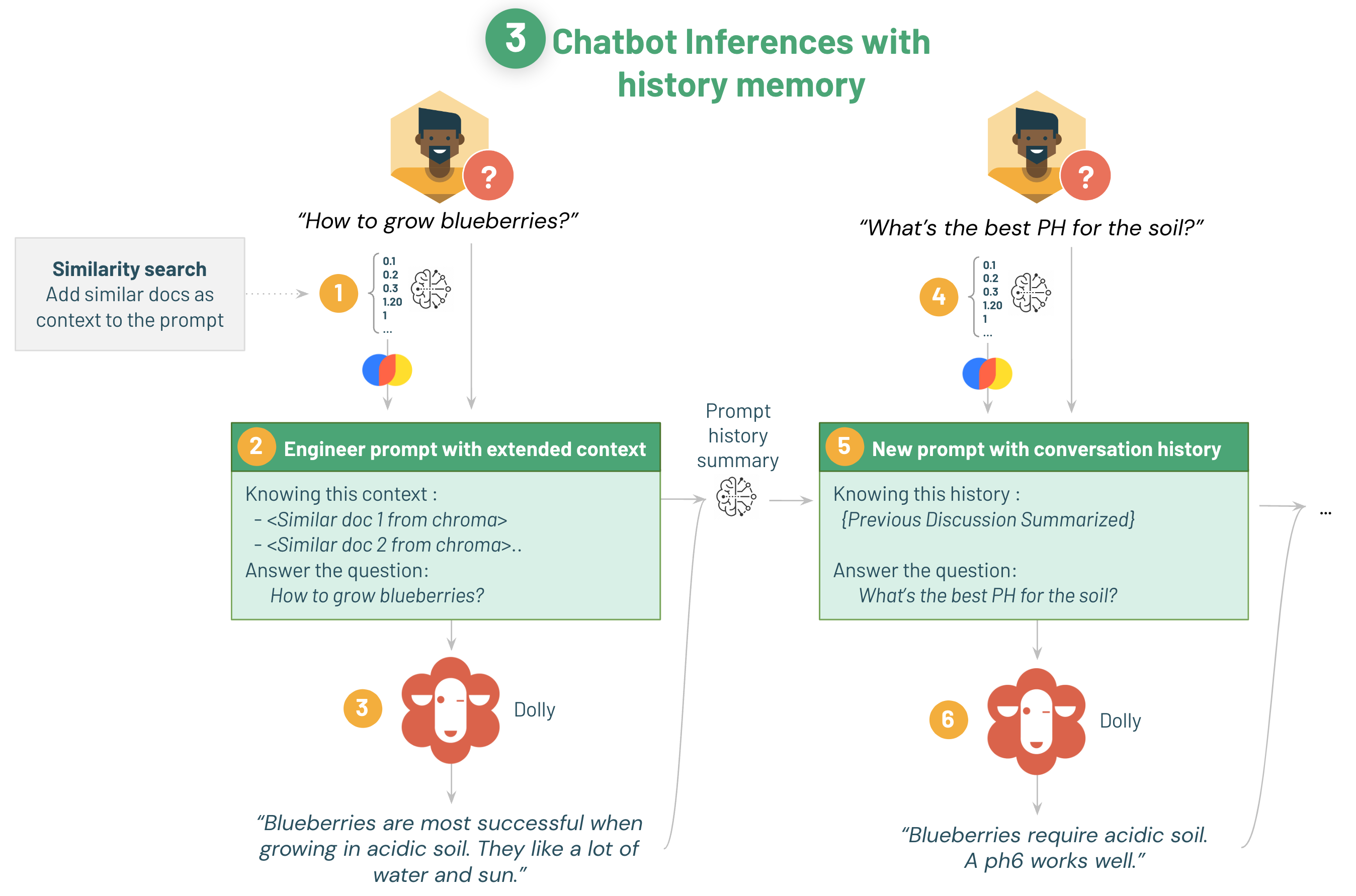

3/ Prompt engineering for a chat bot

Let's extend our bot's capabilities so that it behaves as a chat bot.

In this example, we'll improve our bot by letting it chain multiple questions and answers.

We'll keep the previous behavior (adding context from our Q&A dataset), and on top of that make sure the bot keeps a memory of the conversation between questions.

Let's leverage the `langchain` `ConversationSummaryMemory` object with an intermediate model to summarize our ongoing discussion and keep our prompt size under control!
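The idea behind a summarizing memory can be illustrated without any library. The sketch below is plain Python and is not the `langchain` class: `SummaryMemory` and `truncating_summarizer` are hypothetical names, and the summarizer simply truncates text where a real setup would call an intermediate model.

```python
from typing import Callable


class SummaryMemory:
    """Keeps a running summary of the conversation instead of the full transcript."""

    def __init__(self, summarize: Callable[[str, str], str]):
        self.summarize = summarize
        self.summary = ""

    def save_turn(self, question: str, answer: str) -> None:
        """Fold the latest exchange into the running summary."""
        new_lines = f"Human: {question}\nAI: {answer}"
        self.summary = self.summarize(self.summary, new_lines)

    def build_prompt(self, question: str) -> str:
        """Prefix the new question with the summary so the model sees past context."""
        return (
            f"Summary of the conversation so far:\n{self.summary}\n\n"
            f"Human: {question}\nAI:"
        )


def truncating_summarizer(previous: str, new_lines: str, max_chars: int = 200) -> str:
    """Hypothetical stand-in for a summarization model: keep only the latest characters."""
    combined = (previous + "\n" + new_lines).strip()
    return combined[-max_chars:]


memory = SummaryMemory(truncating_summarizer)
memory.save_turn("How to grow blueberries?", "Plant them in acidic soil.")
print(memory.build_prompt("What's the ideal soil pH?"))
```

Because the summary is bounded, the prompt stays roughly the same size no matter how long the conversation runs.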

Open the next [04-chat-bot-prompt-engineering-dolly]($./04-chat-bot-prompt-engineering-dolly) notebook to build your own chat bot!

4/ Fine tuning Dolly

If you have a clean dataset and want to specialize the way Dolly answers, you can fine-tune the LLM for your own requirements!

This is a more advanced use case. It can be useful to make Dolly answer in a specific chat format, using a dataset formatted like the following example:

```

AI: I'm a gardening bot, how can I help?

H: How to grow blueberries?

AI: Blueberries are most successful when grown in acidic soil. They like a lot of water and sun.

H: What's the ideal soil pH?

AI: To grow your blueberries, it's best to have a soil with a pH of 6, slightly acidic.

...

```
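As a rough sketch of how such a chat-formatted dataset could be produced, the snippet below turns question / answer pairs into one training text per conversation. The function name `to_chat_text`, the speaker labels and the JSON Lines layout with a single `text` field are assumptions made for illustration; check the fine-tuning code you use for the format it actually expects.

```python
import json
from typing import Dict, List


def to_chat_text(
    turns: List[Dict[str, str]],
    greeting: str,
    bot_label: str = "AI",
    human_label: str = "Human",
) -> str:
    """Render a list of question/answer turns as a single chat transcript."""
    lines = [f"{bot_label}: {greeting}"]
    for turn in turns:
        lines.append(f"{human_label}: {turn['question']}")
        lines.append(f"{bot_label}: {turn['answer']}")
    return "\n".join(lines)


# Sample conversation reusing the gardening exchange above.
conversation = [
    {
        "question": "How to grow blueberries?",
        "answer": "Blueberries are most successful when grown in acidic soil.",
    },
    {
        "question": "What's the ideal soil pH?",
        "answer": "It's best to have a soil with a pH of 6, slightly acidic.",
    },
]

text = to_chat_text(conversation, "I'm a gardening bot, how can I help?")

# One JSON object per line (assumed layout: a single "text" field per training example).
jsonl_line = json.dumps({"text": text})
print(jsonl_line)
```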

As Dolly is under active development, we recommend exploring the official [Dolly repository](https://github.com/databrickslabs/dolly) for up-to-date fine-tuning examples!