Scale pandas API with Databricks runtime as backend

With Databricks, data scientists don't have to learn a new API to analyse data and deploy new models to production.

* If your model is small and fits on a single node, you can use a Single Node cluster with pandas directly.

* If your data grows, there is no need to rewrite the code. Just switch to pandas on Spark and your cluster will parallelize your compute out of the box.

Scalability beyond a single machine

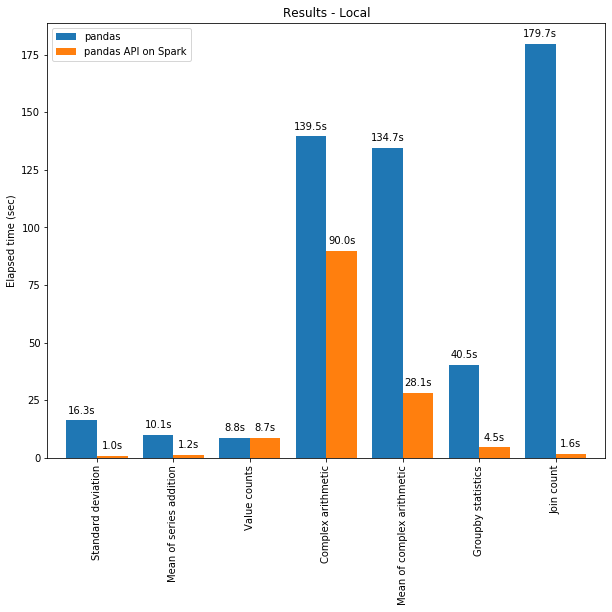

One of the known limitations of pandas is that it does not scale linearly with data volume, because all processing happens on a single machine. For example, pandas fails with an out-of-memory error if it attempts to read a dataset that is larger than the memory available on that machine.

Pandas API on Spark overcomes this limitation, enabling users to work with large datasets by leveraging Spark while still using the pandas API!

**As a result, data scientists can access datasets in Unity Catalog with a simple SQL or Spark command, and then switch to the API they know best (pandas) without having to worry about table size and scalability!**

*Note: Starting with Spark 3.2, the pandas API is part of the Spark runtime itself (the `pyspark.pandas` module), so there is no need to install an external library!*
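As a minimal sketch of the workflow described above, the helpers below read a catalog table (or the result of a SQL query) straight into a pandas-on-Spark dataframe. The helper names and any table name passed to them are hypothetical; `ps.read_table` and `ps.sql` are part of `pyspark.pandas`, and a running Spark session (as on a Databricks cluster) is assumed.

```python
def read_catalog_table(table_name):
    """Load a catalog table as a pandas-on-Spark dataframe."""
    # Imported inside the function so the helper can be defined
    # even before pyspark is importable in the current environment.
    import pyspark.pandas as ps

    return ps.read_table(table_name)


def query_catalog(query):
    """Run a SQL query and return the result as a pandas-on-Spark dataframe."""
    import pyspark.pandas as ps

    return ps.sql(query)
```

On a cluster this could be called as `psdf = read_catalog_table("my_catalog.my_schema.my_table")`, where the three-level name is a placeholder for one of your own tables. From there, `psdf` supports the usual pandas-style calls.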

Loading data from files at scale using pandas API
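A sketch of what loading files could look like, assuming the data lives in CSV or Parquet files reachable from the cluster. The helper names and paths are hypothetical; `ps.read_csv` and `ps.read_parquet` mirror their pandas counterparts but distribute the read across the cluster.

```python
def load_csv(path):
    """Read one or more CSV files into a pandas-on-Spark dataframe."""
    # Imported lazily so defining the helper does not require pyspark yet.
    import pyspark.pandas as ps

    return ps.read_csv(path)


def load_parquet(path):
    """Read a Parquet file or directory into a pandas-on-Spark dataframe."""
    import pyspark.pandas as ps

    return ps.read_parquet(path)
```

For example, `psdf = load_csv("/path/to/your/data.csv")`, with the path replaced by a location that exists in your workspace.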

The pandas API is now fully available to explore our dataset.

Existing pandas code will now work and scale out of the box:
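To illustrate, here is a small function written only against the pandas dataframe API. It is a sketch with hypothetical column names, and because it uses nothing but `groupby`, `sum`, `sort_values` and `head`, it should accept either a plain pandas dataframe or a pandas-on-Spark dataframe unchanged.

```python
def top_categories(df, category_col, value_col, n=3):
    """Return the n categories with the largest summed value.

    Works on any dataframe exposing the pandas groupby API,
    including pandas-on-Spark dataframes.
    """
    totals = df.groupby(category_col)[value_col].sum()
    return totals.sort_values(ascending=False).head(n)
```

The same call, for instance `top_categories(df, "category", "amount")`, runs on a single node when `df` is a pandas dataframe and is distributed by Spark when `df` is a pandas-on-Spark dataframe.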

Running SQL on top of pandas dataframe

A pandas-on-Spark dataframe can be queried using plain SQL, so you can move freely between the pandas Python API and SQL.
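A minimal sketch of such a query, assuming Spark 3.3 or later, where `ps.sql` accepts dataframes as keyword arguments and substitutes them for the matching `{name}` placeholders in the query. The helper name and the `category` and `amount` columns are hypothetical.

```python
def total_by_category(psdf):
    """Aggregate a pandas-on-Spark dataframe with plain SQL."""
    # Imported lazily so defining the helper does not require pyspark yet.
    import pyspark.pandas as ps

    # {source} is replaced by the dataframe passed as the `source` keyword.
    return ps.sql(
        "SELECT category, SUM(amount) AS total FROM {source} GROUP BY category",
        source=psdf,
    )
```

The result is itself a pandas-on-Spark dataframe, so it can be passed back into pandas-style code.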

Pandas visualisation

Pandas API on Spark uses plotly by default to render interactive charts. Use the standard `.plot` accessor on a pandas-on-Spark dataframe to get insights:
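As a sketch, a bar chart could be produced as follows. The helper name is hypothetical and the column names are supplied by the caller; `plot.bar` is the same accessor pandas offers.

```python
def plot_totals(psdf, x, y):
    """Draw a bar chart of column `y` against column `x`.

    With the default plotting backend of pandas API on Spark,
    this returns an interactive plotly figure.
    """
    return psdf.plot.bar(x=x, y=y)
```

In a notebook, leaving the call as the last expression of a cell, for example `plot_totals(psdf, "category", "total")`, displays the chart.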

That's it! You're now ready to use all the capabilities of Databricks while staying on the API you know best!

Going further with koalas

Want to know more? Check the [Koalas documentation](https://koalas.readthedocs.io/en/latest/) and the integration with [Spark 3.2](https://databricks.com/fr/blog/2021/10/04/pandas-api-on-upcoming-apache-spark-3-2.html).