1/ Process and analyze text with built-in Databricks SQL AI functions

Databricks SQL provides [built-in GenAI capabilities](https://docs.databricks.com/en/large-language-models/ai-functions.html) that let you run ad hoc operations on your data with state-of-the-art LLMs optimized for these tasks.

Discover how to use functions such as `ai_analyze_sentiment`, `ai_classify` or `ai_gen` with Databricks SQL. Databricks AI Functions are also fully serverless, which delivers 10x faster performance without requiring you to set up an inference endpoint or manage infrastructure.
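As a quick illustration, the three functions named above can be combined in a single query. This is a minimal sketch, not taken from the notebook: the inline `reviews` rows, the column names, and the label list are hypothetical, and it assumes AI Functions are enabled in your workspace.

```sql
-- Hypothetical sample data; replace the CTE with your own reviews table.
WITH reviews AS (
  SELECT * FROM VALUES
    (1, 'The headphones stopped working after two days. Very disappointed.'),
    (2, 'Fast delivery and the blender works great!')
  AS t(review_id, review)
)
SELECT
  review_id,
  -- Sentiment label for the review text
  ai_analyze_sentiment(review) AS sentiment,
  -- Picks one label from the (hypothetical) list provided
  ai_classify(review, ARRAY('product quality', 'delivery', 'pricing')) AS topic,
  -- Free-form generation from a prompt
  ai_gen('Write a one-sentence summary of this customer review: ' || review) AS summary
FROM reviews;
```

Each function runs once per row, so the same pattern applies unchanged to a full table of reviews.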

Open the [01-Builtin-SQL-AI-Functions]($./01-Builtin-SQL-AI-Functions) notebook to get started with the built-in Databricks SQL AI functions.

2/ Going Further: Actioning Customer Reviews at Scale with Databricks SQL AI Functions and a Custom Model Endpoint (LLM or other)

Databricks built-in AI functions are powerful and let you quickly accomplish many text tasks.

However, you might sometimes need finer-grained control: selecting which Foundation Model to call (Mistral, Llama, DBRX, OpenAI or one of your own fine-tuned models) and passing it specific instructions.

You might also need to query your own fine-tuned LLMs, which lets small models perform extremely well on specialized tasks at a lower cost.

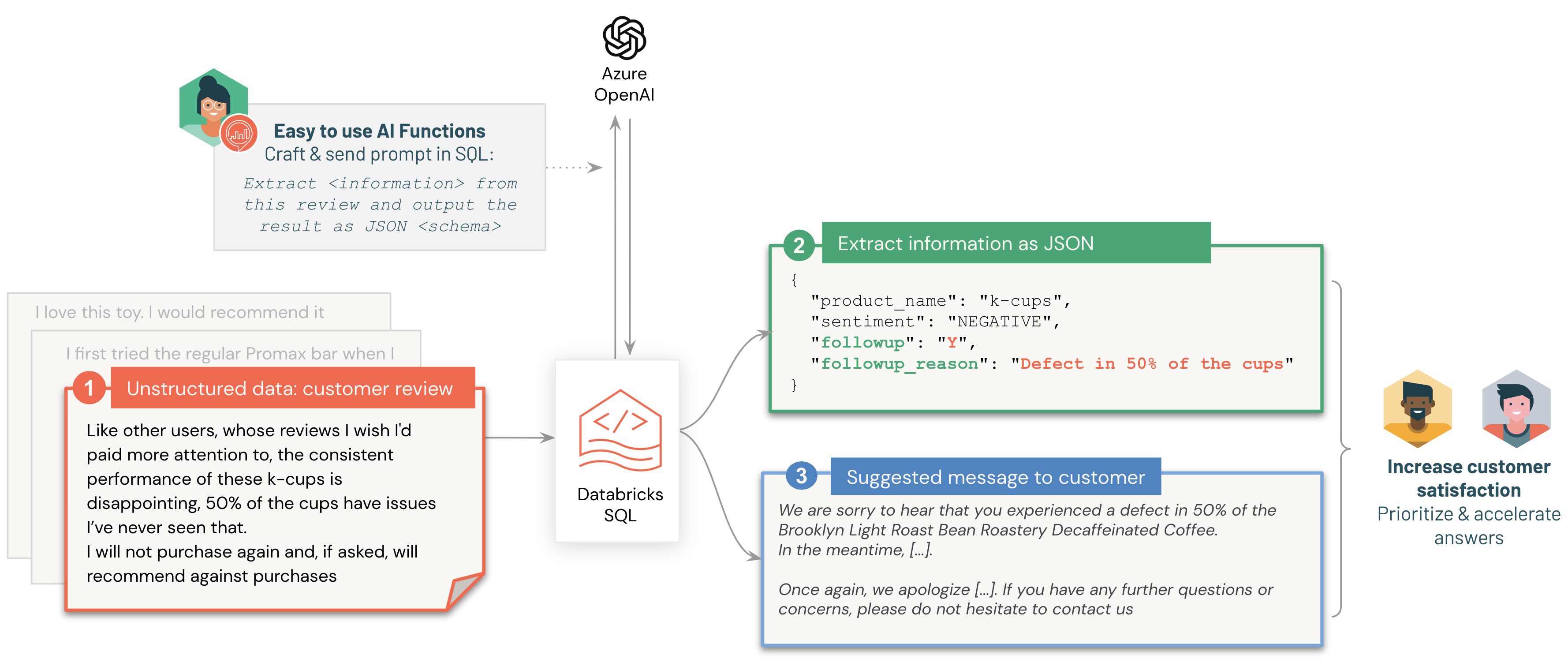

Use case: Increasing customer satisfaction and reducing churn with automatic review analysis

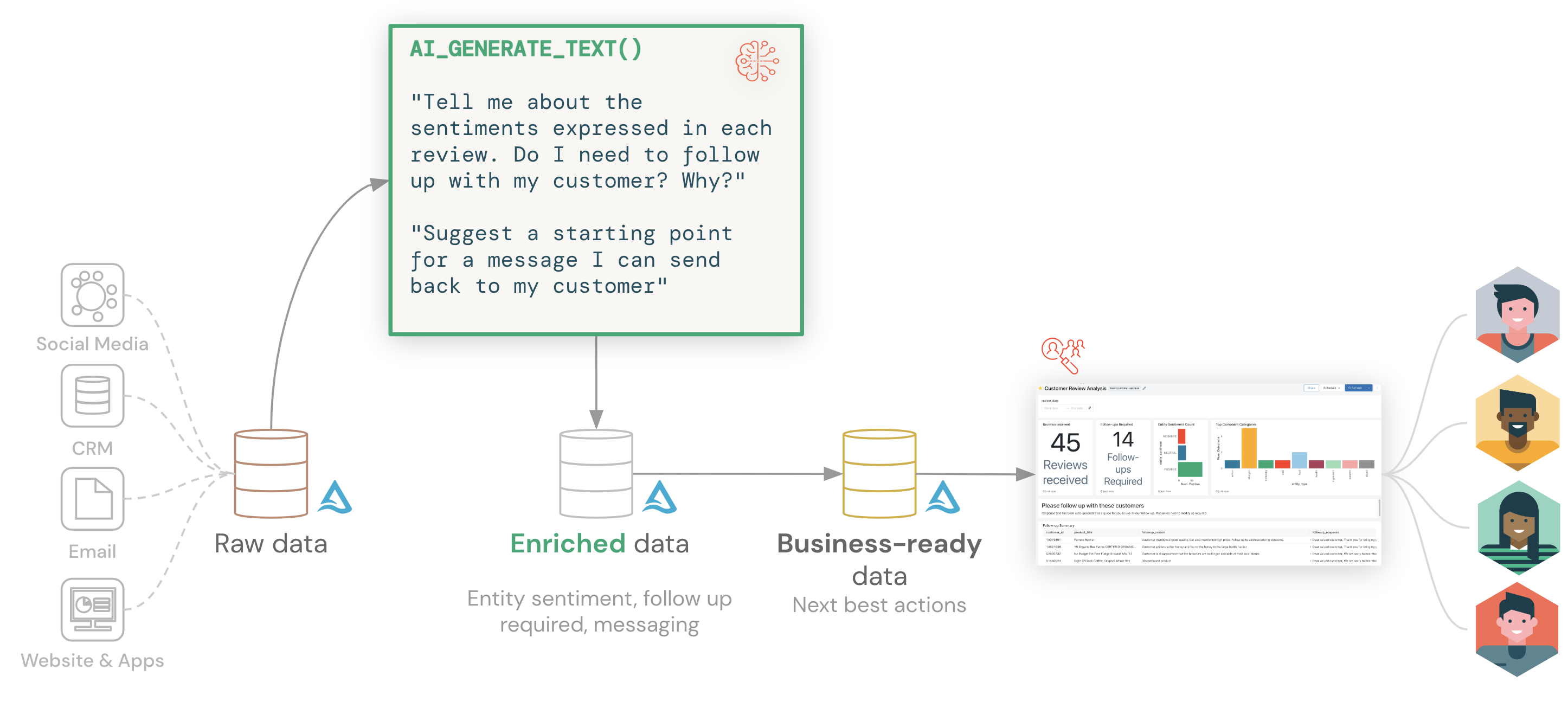

In this demo, we'll build a data pipeline that takes customer reviews as text and analyzes them with LLMs (Databricks Foundation Models such as Llama or Mistral).

We'll even provide recommendations for next best actions to our customer service team, i.e. whether a customer requires follow-up, and a sample message to follow up with.

For each review, we:

- Extract information such as sentiment and whether a response is required back to the customer

- Generate a response mentioning alternative products that may satisfy the customer
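The two steps above can be sketched in SQL with `ai_query()`, which is introduced in section 2.1 below. This is only an illustrative sketch under stated assumptions, not the pipeline from the notebook: the inline review, the prompts, and the column names are hypothetical, and the endpoint name `databricks-meta-llama-3-3-70b-instruct` is assumed to be available in your workspace.

```sql
-- Hypothetical sample data; replace the CTE with your own reviews table.
WITH reviews AS (
  SELECT * FROM VALUES
    (1, 'The vacuum cleaner is far too loud and the battery dies in ten minutes.')
  AS t(review_id, review)
),
analysis AS (
  SELECT
    review_id,
    review,
    -- Step 1a: extract the sentiment (endpoint name is an assumption)
    ai_query(
      'databricks-meta-llama-3-3-70b-instruct',
      CONCAT('Answer with exactly one word, POSITIVE, NEGATIVE or NEUTRAL. ',
             'What is the sentiment of this review? Review: ', review)
    ) AS sentiment,
    -- Step 1b: decide whether the customer needs a response
    ai_query(
      'databricks-meta-llama-3-3-70b-instruct',
      CONCAT('Answer with exactly one word, YES or NO. ',
             'Does this review require a follow-up from customer service? Review: ', review)
    ) AS needs_followup
  FROM reviews
)
SELECT
  review_id,
  sentiment,
  needs_followup,
  -- Step 2: draft a reply only when a follow-up is needed
  CASE WHEN upper(trim(needs_followup)) LIKE 'YES%' THEN
    ai_query(
      'databricks-meta-llama-3-3-70b-instruct',
      CONCAT('Write a short, polite reply to this customer and suggest an ',
             'alternative product that may suit them better. Review: ', review)
    )
  END AS draft_reply
FROM analysis;
```

LLM answers are free text, so the `upper(trim(...)) LIKE 'YES%'` check is a deliberately loose guard; a production pipeline would likely want stricter output parsing.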

2.1/ Introducing the Databricks AI_QUERY() function: generating a dataset

Databricks provides an `ai_query()` function that you can use to call any model endpoint.

This lets you wrap functions around any Model Serving Endpoint. Your endpoint can then implement any logic (a Foundation Model, a more advanced Chain Of Thought, or a classic ML model).

AI Functions abstract away the technical complexities of calling LLMs, enabling analysts and data scientists to start using these models without worrying about the underlying infrastructure.
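To make the wrapping idea concrete, here is a minimal sketch of a SQL function built on top of `ai_query()`. The function name `ask_llm` is hypothetical, and the endpoint name `databricks-meta-llama-3-3-70b-instruct` is an assumption; substitute any Model Serving endpoint you can access.

```sql
-- Hypothetical wrapper: hides the endpoint name behind a reusable SQL function.
CREATE OR REPLACE FUNCTION ask_llm(prompt STRING)
  RETURNS STRING
  COMMENT 'Sends a prompt to a Model Serving endpoint and returns the text answer'
  RETURN ai_query('databricks-meta-llama-3-3-70b-instruct', prompt);

-- Usage: analysts call the function without knowing which endpoint serves it.
SELECT ask_llm('Write a two-sentence customer review for a pair of wireless headphones.') AS fake_review;
```

Keeping the endpoint name in one place means the underlying model can be swapped later without touching the queries that call the function.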

In this demo, we use one of our [Foundation models](https://docs.databricks.com/en/machine-learning/foundation-models/index.html), [Llama 3.3 70B Instruct](https://www.databricks.com/blog/making-ai-more-accessible-80-cost-savings-meta-llama-33-databricks), as the LLM that AI_QUERY() calls to generate fake reviews.

Open the next notebook to generate some sample data for our demo: [02-Generate-fake-data-with-AI-functions]($./02-Generate-fake-data-with-AI-functions)

2.2/ Building our SQL pipeline with our LLM to extract review sentiments

We are now ready to create our full data pipeline:

Open the [03-automated-product-review-and-answer]($./03-automated-product-review-and-answer) notebook to process our text using SQL and automate our review answers!

Optional: If you cannot access a foundation model, follow the instructions in the notebook [04-Extra-setup-external-model-OpenAI]($./04-Extra-setup-external-model-OpenAI) to use an OpenAI model instead.

Further Reading and Resources

- [Documentation](https://docs.databricks.com/en/large-language-models/ai-query-external-model.html)

- [Introducing AI Functions: Integrating Large Language Models with Databricks SQL](https://www.databricks.com/blog/2023/04/18/introducing-ai-functions-integrating-large-language-models-databricks-sql.html)