Test models with the Databricks AI Playground

The AI Playground is an environment in your Databricks workspace for testing and comparing large language models (LLMs). It is one of the easiest ways to start experimenting with the models available through the Foundation Model APIs.

Getting Started

To get started, navigate to “Playground” under “Machine Learning” in the left navigation menu.

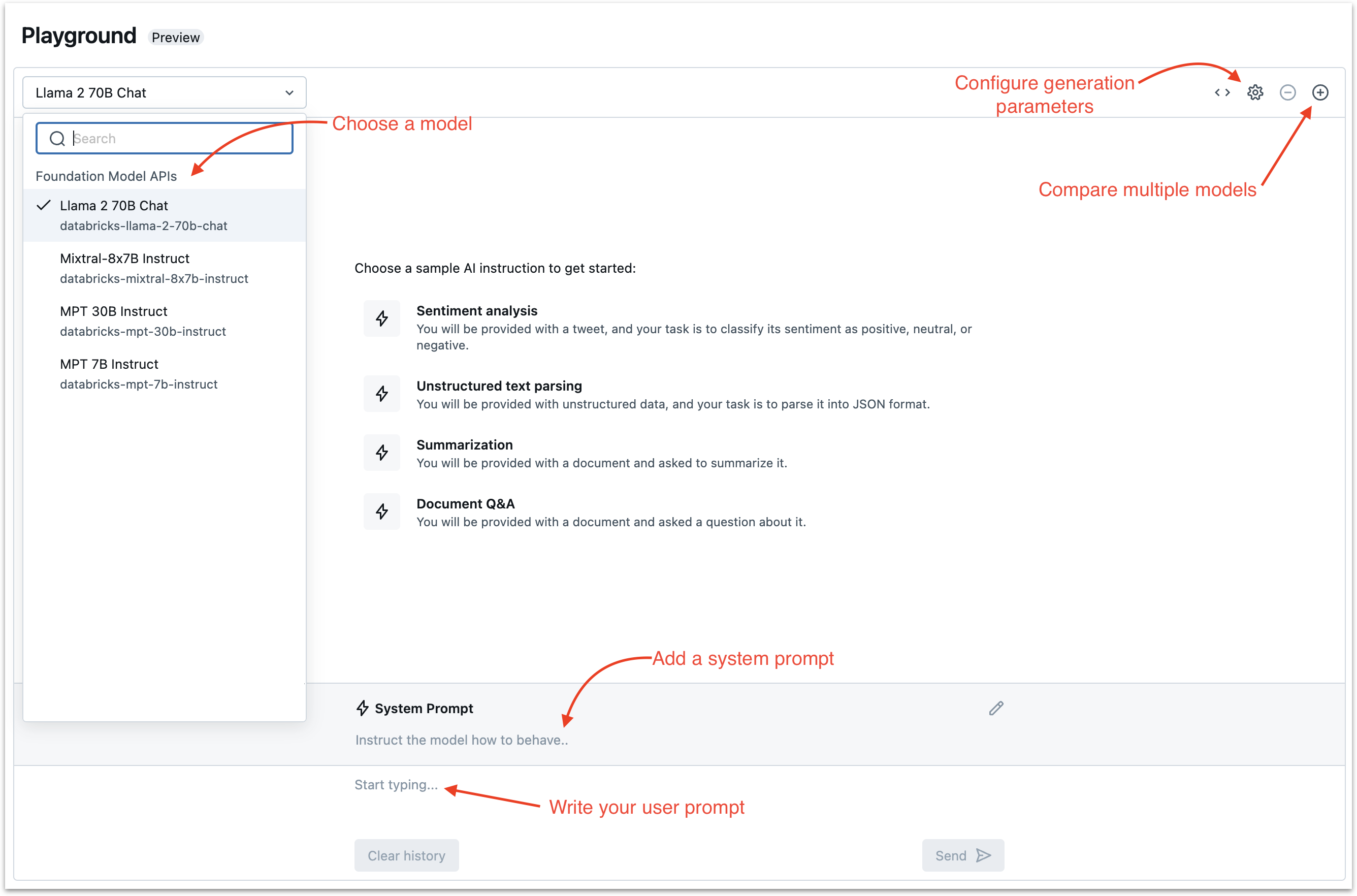

This opens the playground, which provides an intuitive interface for experimenting with models. The image below gives a brief tour of the interface.

Preconfigured Examples

As you can see in the image above, the AI Playground includes a selection of preconfigured examples. These are useful if you are new to LLMs or want a quick demonstration of how the AI Playground works.

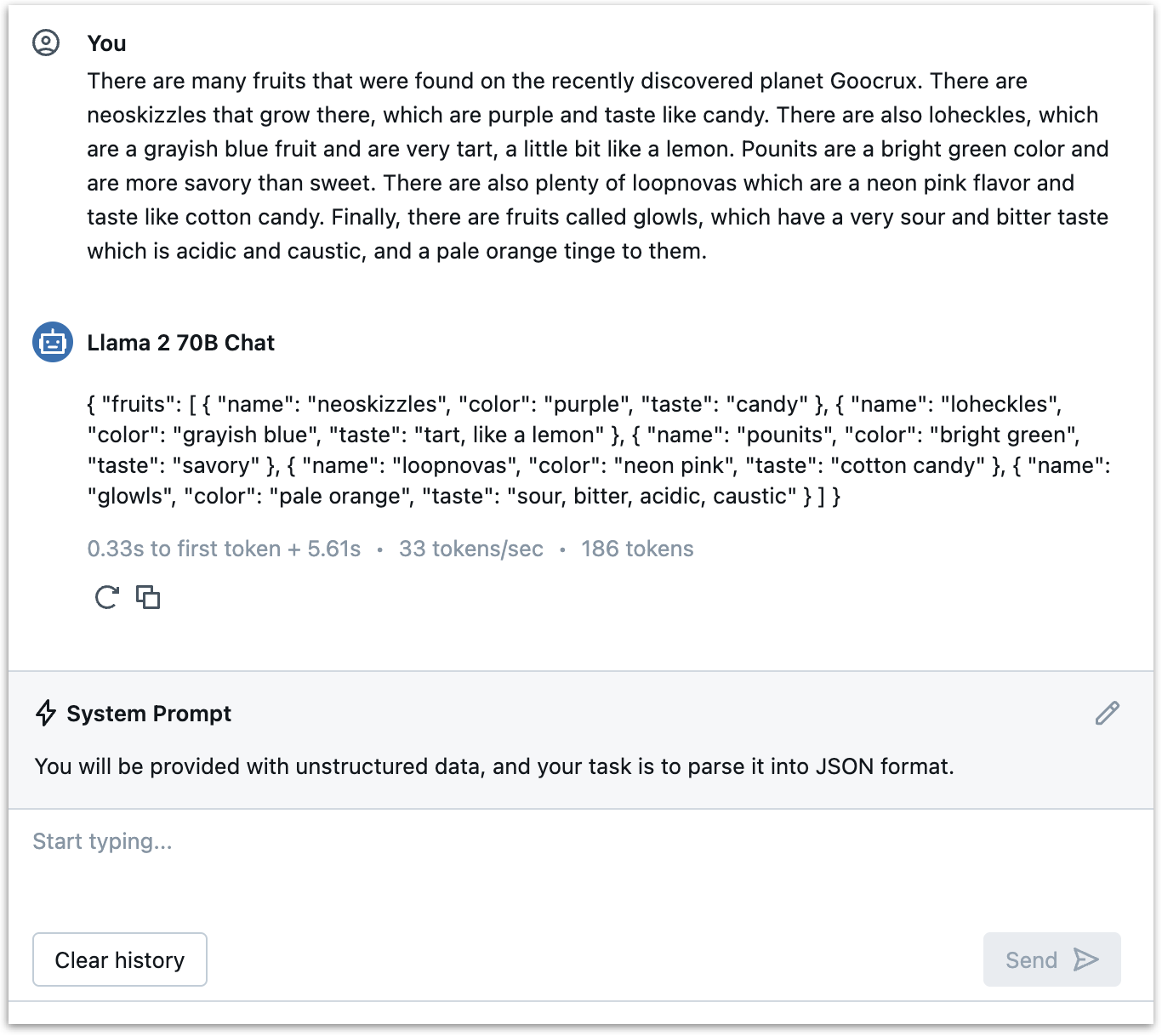

For example, the “Unstructured text parsing” section demonstrates parsing free-form input text into JSON.
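The model's answer is plain text. If you reuse a parsing prompt like this outside the playground, you usually need to confirm that the reply really is valid JSON. The following is a minimal, hypothetical sketch of that check. The `parse_model_json` helper is our own, as is the assumption that a model may wrap its JSON in a Markdown code fence. Neither is part of the preconfigured example.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built here to avoid literal backticks

# Matches a reply wrapped in a fence such as: <fence>json ... <fence>
_FENCED = re.compile(rf"^{FENCE}(?:json)?\s*(.*?)\s*{FENCE}$", re.DOTALL)


def parse_model_json(reply: str, required_keys: set[str]) -> dict:
    """Parse a model reply that is expected to contain one JSON object.

    Hypothetical helper: strips an optional Markdown code fence, parses the
    JSON, and checks that every required key is present.
    Raises ValueError (json.JSONDecodeError is a subclass) on bad output.
    """
    text = reply.strip()
    match = _FENCED.match(text)
    if match:
        text = match.group(1)
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    # Example reply text, invented for illustration.
    reply = '{"name": "Ada", "company": "Acme", "email": "ada@example.com"}'
    print(parse_model_json(reply, {"name", "company", "email"}))
```

Raising an error on malformed output, rather than returning partial data, makes it obvious when a prompt change breaks the expected structure.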

Comparing Models

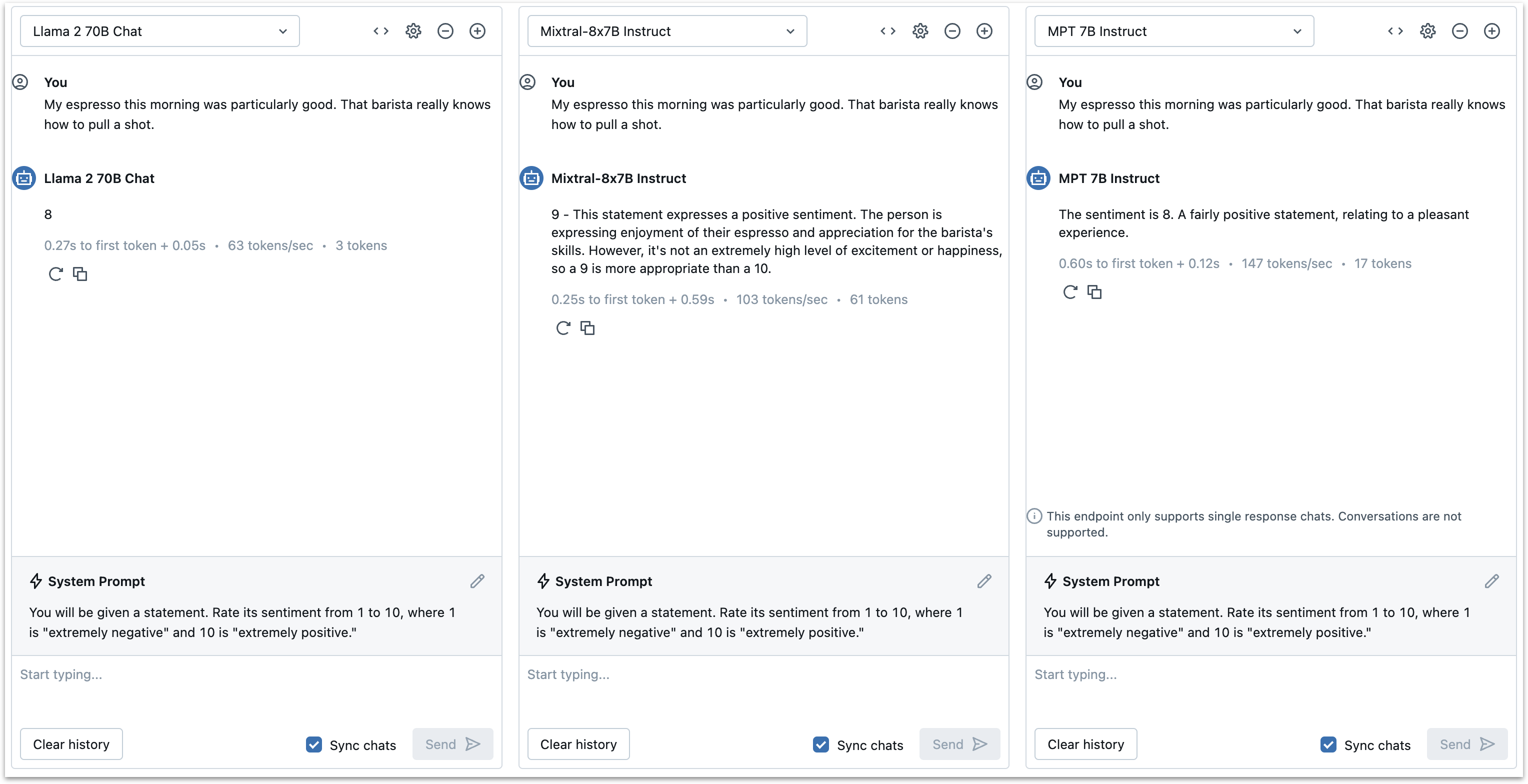

To compare multiple models, click the + button (“Add endpoint”) in the top right corner of the playground one or more times. As long as the “sync chats” checkbox is checked, the system prompt and user prompts are synced across all the models. This makes it easy to compare how models perform across different tasks and prompts. Uncheck the “sync chats” box to send different prompts to different models.
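Conceptually, “sync chats” sends the same conversation to every selected endpoint. The sketch below models that fan-out as plain request bodies, so the idea can be followed without a workspace. It assumes an OpenAI-style chat format: a list of `messages`, each with a `role` and `content`. The request schema for your endpoint may differ. The helper names and the endpoint names `endpoint-a` and `endpoint-b` are hypothetical.

```python
from typing import Optional


def build_chat_payload(system_prompt: str, user_prompt: str) -> dict:
    """Build a chat request body in an OpenAI-style "messages" format (assumed)."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return {"messages": messages}


def fan_out(
    endpoints: list[str],
    system_prompt: str,
    user_prompt: str,
    sync: bool = True,
    overrides: Optional[dict[str, tuple[str, str]]] = None,
) -> dict[str, dict]:
    """Map each endpoint name to the request body it would receive.

    sync=True mimics a checked "sync chats" box: every endpoint gets the shared
    prompts. With sync=False, `overrides` maps an endpoint name to its own
    (system_prompt, user_prompt) pair.
    """
    overrides = overrides or {}
    bodies = {}
    for name in endpoints:
        if not sync and name in overrides:
            # "Sync chats" unchecked: this endpoint gets its own prompts.
            system, user = overrides[name]
            bodies[name] = build_chat_payload(system, user)
        else:
            # Synced, or no override given: a fresh body built from the shared prompts.
            bodies[name] = build_chat_payload(system_prompt, user_prompt)
    return bodies


if __name__ == "__main__":
    bodies = fan_out(["endpoint-a", "endpoint-b"], "Answer in one sentence.", "What is a vector database?")
    for name, body in bodies.items():
        print(name, body)
```

Actually sending each body to its endpoint requires workspace credentials, so that step is left out here. One option is the MLflow Deployments client (`mlflow.deployments.get_deploy_client("databricks")` and its `predict` method). Check the Databricks documentation for your setup.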

You can also use this feature to compare the same model with different generation parameters.

Generation Parameters

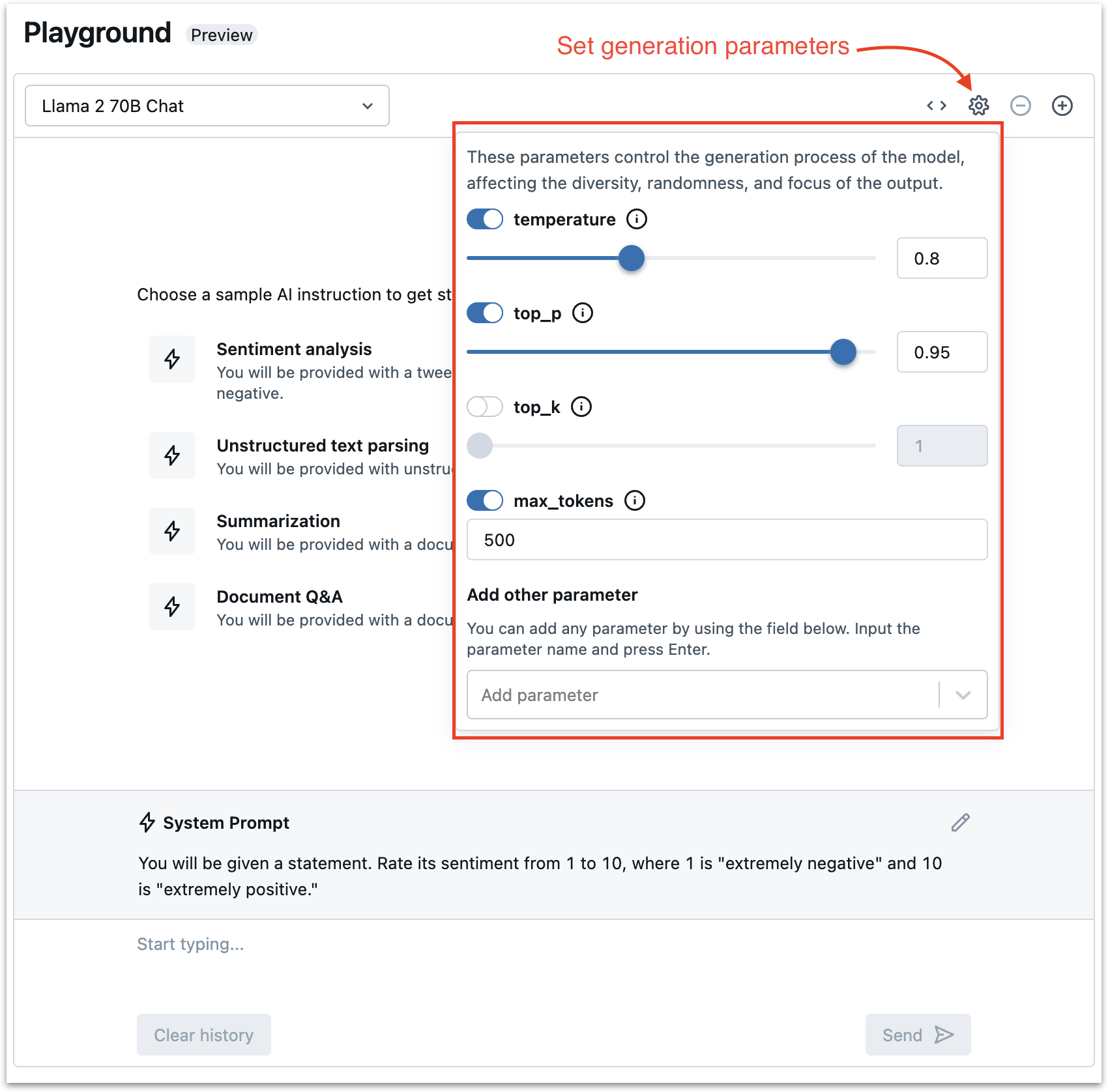

To set generation parameters such as temperature and max tokens, click the gear icon in the top right corner of the playground.
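Outside the UI, temperature and max tokens are typically sent as fields of the request body. The sketch below assumes these fields are named `temperature` and `max_tokens`, as in the OpenAI-style chat format. The helpers are hypothetical. The sketch also builds one request per parameter combination, which is the programmatic analogue of comparing the same model with different generation parameters. The non-negativity check on temperature is a conservative assumption and does not encode any model's actual upper bound.

```python
from itertools import product


def with_generation_params(payload: dict, temperature: float, max_tokens: int) -> dict:
    """Return a copy of a chat request body with sampling parameters added.

    The copy is shallow: the "messages" list is shared with the original,
    which is fine as long as nobody mutates it.
    """
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if not isinstance(max_tokens, int) or max_tokens <= 0:
        raise ValueError("max_tokens must be a positive integer")
    return {**payload, "temperature": temperature, "max_tokens": max_tokens}


def parameter_grid(payload: dict, temperatures: list[float], max_tokens_options: list[int]) -> list[dict]:
    """Build one request body per (temperature, max_tokens) combination."""
    return [
        with_generation_params(payload, t, m)
        for t, m in product(temperatures, max_tokens_options)
    ]


if __name__ == "__main__":
    base = {"messages": [{"role": "user", "content": "Write a haiku about data lakes."}]}
    for body in parameter_grid(base, [0.0, 1.0], [64, 256]):
        print(body["temperature"], body["max_tokens"])
```

A low temperature makes output more deterministic, which gives a useful baseline for comparison against higher-temperature runs. The valid ranges and defaults vary by model.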

See Also

The MLflow Prompt Engineering UI is another tool for comparing models and prompts. It is particularly useful for configuring prompt templates and for comparing how different models and inputs perform with those templates. The AI Playground, by contrast, is centered on the chat interface and is well suited to comparing chat and instruction-following models across a variety of tasks.
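A prompt template is a prompt with named placeholders that are filled in for each input, so the same template can be run against several models and inputs. The following is a minimal, hypothetical renderer. It assumes double-brace placeholders such as `{{ question }}`. Check the MLflow documentation for the exact syntax the Prompt Engineering UI expects.

```python
import re

# Assumed placeholder syntax: {{ name }}, with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def template_variables(template: str) -> list[str]:
    """Return the sorted, de-duplicated variable names used in a template."""
    return sorted(set(PLACEHOLDER.findall(template)))


def render(template: str, variables: dict[str, str]) -> str:
    """Fill every placeholder; raise KeyError if a variable has no value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"no value for template variable {name!r}")
        # Text returned from a replacement function is inserted literally.
        return variables[name]

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    template = "Answer the question using one sentence.\nQuestion: {{ question }}"
    inputs = [{"question": "What is Delta Lake?"}, {"question": "What is MLflow?"}]
    # One rendered prompt per input row; each could then be sent to several models.
    for row in inputs:
        print(render(template, row))
```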